Implementing a MediaConfig API for Chromium: A Walkthrough

Background

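Before implementing any feature, you generally need data to back it up. Data lets you validate the payoff and collect test cases. So before attempting HEVC hardware decoding, we first had to measure how many videos are actually HEVC and obtain their decoding parameters.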

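Unfortunately, the browser does not expose a video's decoding information to us. Apart from the display size (videoWidth/videoHeight), parameters such as the codec, profile, and color space are invisible to the frontend, and there is no way to detect them.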

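Traditionally, collecting video information for statistics meant first getting hold of the video's binary data. You then parsed its metadata in the frontend (for example, the HEVC VPS, SPS, and PPS) to extract the parameters. The drawbacks are obvious:

- it does not work for content played natively by the video tag;
- it needs an extra, second request;
- it has to be implemented separately for every codec.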
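To make the "parse it yourself" approach concrete, its first step is locating the parameter-set NAL units in the raw bitstream. Below is a minimal sketch that assumes an Annex B (start-code delimited) HEVC elementary stream; the function names are hypothetical. HEVC packaged in MP4 (hvcC, length-prefixed NAL units) would need a different parser. That is exactly why this approach has to be re-implemented per codec and per container.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// HEVC NAL unit types for the parameter sets (ITU-T H.265, Table 7-1).
constexpr int kHevcNalVps = 32;
constexpr int kHevcNalSps = 33;
constexpr int kHevcNalPps = 34;

// Returns the nal_unit_type of every NAL unit in an Annex B byte stream.
// A 4-byte start code (00 00 00 01) ends with the 3-byte pattern 00 00 01,
// so matching the 3-byte pattern covers both forms. Emulation prevention
// guarantees 00 00 01 never appears inside a NAL payload.
std::vector<int> ScanHevcNalTypes(const std::vector<uint8_t>& data) {
  std::vector<int> types;
  for (size_t i = 0; i + 3 < data.size(); ++i) {
    if (data[i] == 0x00 && data[i + 1] == 0x00 && data[i + 2] == 0x01) {
      // First HEVC NAL header byte: forbidden_zero_bit(1) | nal_unit_type(6) | ...
      types.push_back((data[i + 3] >> 1) & 0x3F);
      i += 2;  // resume scanning right after the start code
    }
  }
  return types;
}

// True when VPS, SPS and PPS all appear, i.e. the stream carries the
// parameter sets a parser would need to read profile, level, size, etc.
bool HasHevcParameterSets(const std::vector<uint8_t>& data) {
  bool vps = false, sps = false, pps = false;
  for (int type : ScanHevcNalTypes(data)) {
    vps |= type == kHevcNalVps;
    sps |= type == kHevcNalSps;
    pps |= type == kHevcNalPps;
  }
  return vps && sps && pps;
}
```

Even after this step, the SPS still has to be bit-parsed (Exp-Golomb codes, profile_tier_level) to get the actual parameters. That is a lot of per-codec work for a statistics feature.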

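If we could instead listen for decoding information in the Renderer, from the browser's own point of view, we might not need any tricks at all. I explored this approach and managed to implement it.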

How Media Internals Works

An Introduction to Media Internals

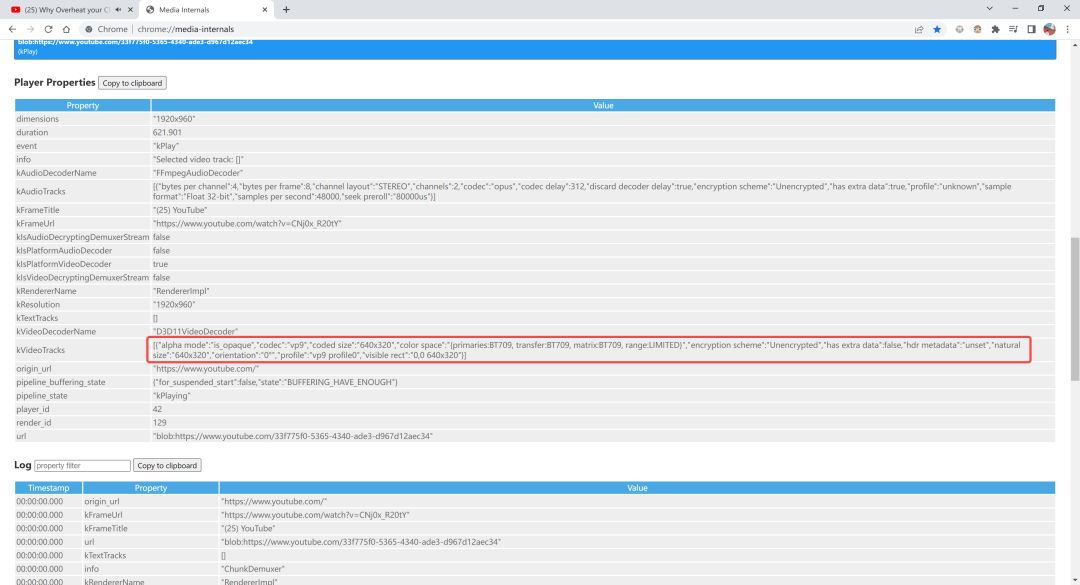

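Chrome (including Chromium-based browsers such as Edge) ships many debug pages for troubleshooting, such as the familiar chrome://flags. Is there such a page for audio and video? Yes: it is called Media Internals. Enter chrome://media-internals in the address bar, then open any page that plays video, for example YouTube.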

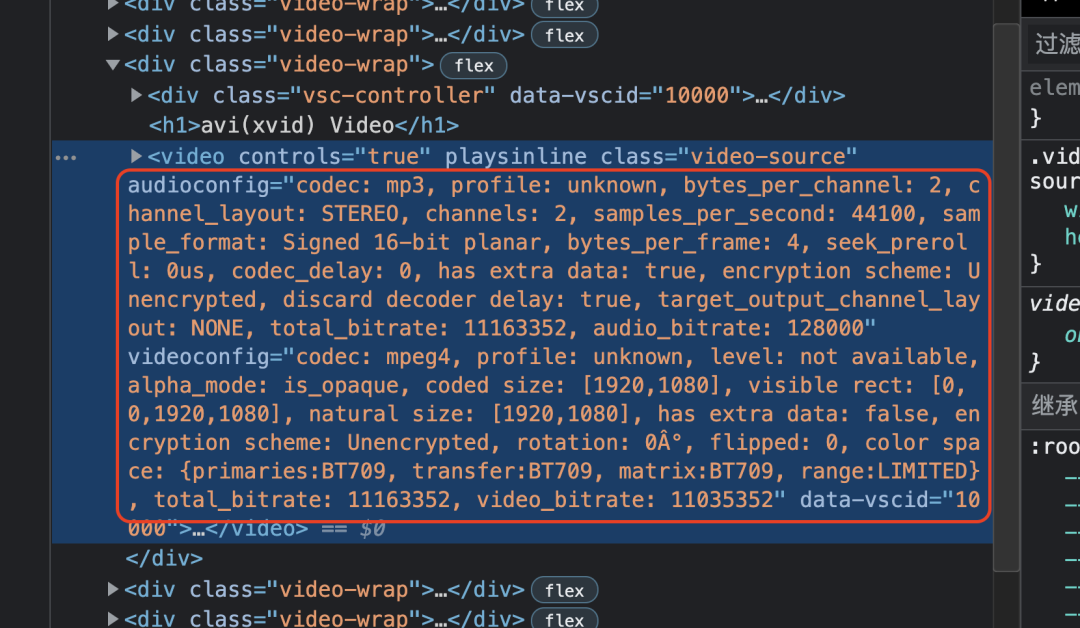

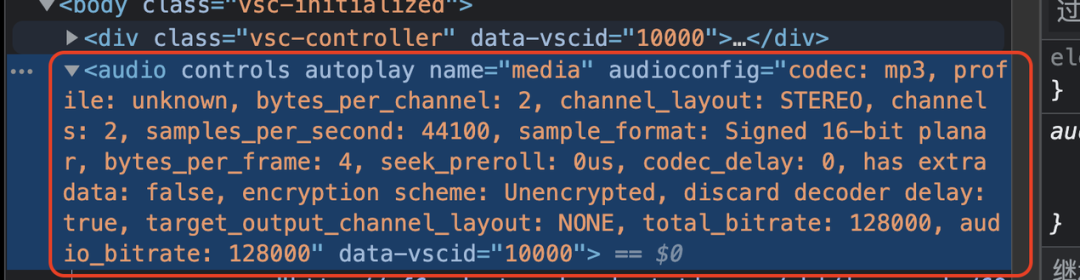

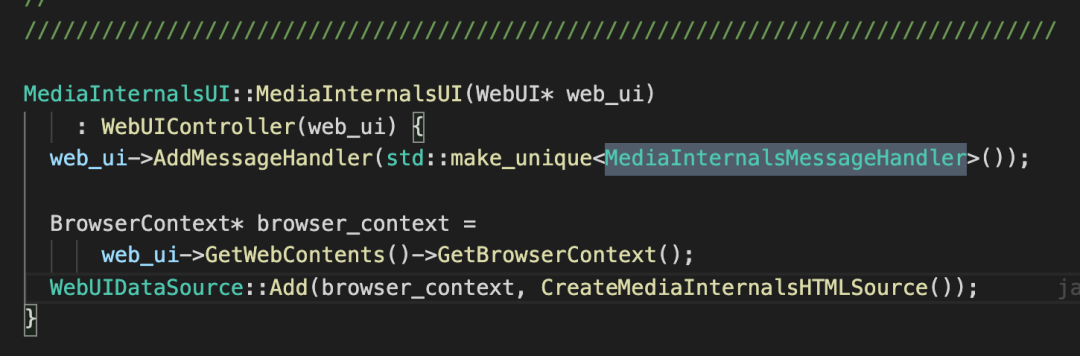

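The page then shows some of the video's internal decoding information, along with its MediaLog. On inspection, the parts circled in red are exactly what we need. If we can read this information directly in the renderer process while the video plays, data collection becomes easy.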

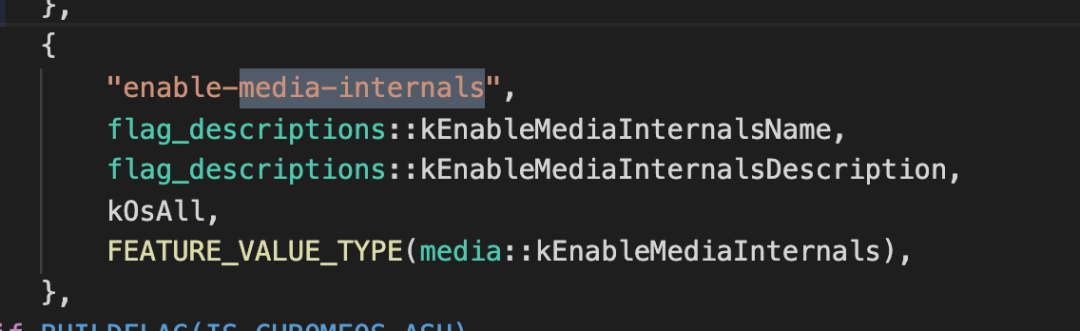

src/chrome/browser/about_flags.cc

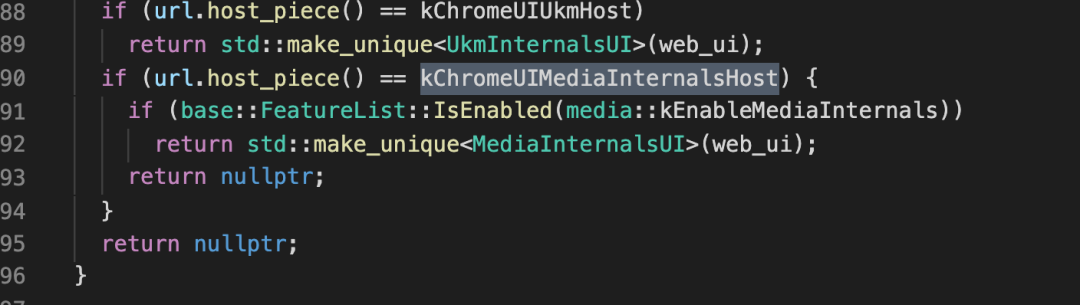

Code that checks the route and loads the corresponding template

Internally, Chrome creates a MediaInternalsUI instance when url.host_piece() equals kChromeUIMediaInternalsHost and the Media Internals feature is enabled:

src/content/browser/webui/content_web_ui_controller_factory.cc

Loading the WebUI module in the browser (main) process

Once the MediaInternalsMessageHandler is registered, CreateMediaInternalsHTMLSource() runs. In other words, the HTML that the frontend displays is served from C++ code:

src/content/browser/media/media_internals_ui.cc

// Entry point

WebUIDataSource* CreateMediaInternalsHTMLSource() {

// Create a WebUIDataSource for the `media-internals` host

WebUIDataSource* source =

WebUIDataSource::Create(kChromeUIMediaInternalsHost);

// Register the page's resource paths with the source

source->UseStringsJs();

source->AddResourcePaths(

base::make_span(kMediaInternalsResources, kMediaInternalsResourcesSize));

// Use media_internals.html as the default resource

source->SetDefaultResource(IDR_MEDIA_INTERNALS_MEDIA_INTERNALS_HTML);

return source;

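}

Reading further into the implementation shows that the content on the media-internals page is generated from the VideoDecoderConfig class. So the media-internals implementation gives us one key insight: if we can get hold of a VideoDecoderConfig, we can build the feature we want.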

// Get this, and we have everything we want

struct VideoDecoderConfig {

VideoCodec codec;

VideoCodecProfile profile;

uint32 level;

bool has_alpha;

VideoTransformation transformation;

gfx.mojom.Size coded_size;

gfx.mojom.Rect visible_rect;

gfx.mojom.Size natural_size;

array<uint8> extra_data;

EncryptionScheme encryption_scheme;

VideoColorSpace color_space_info;

gfx.mojom.HDRMetadata? hdr_metadata;

};

Similarly, Chromium has a matching class for audio information called AudioDecoderConfig. The goal therefore becomes: obtain a **VideoDecoderConfig** and an **AudioDecoderConfig**.

Implementing the Media Codec Information API

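Searching the Chromium source, we found two parsing paths. For regular videos the media information is parsed by FFmpegDemuxer; for MSE videos it is parsed by ChunkDemuxer. The resulting decoder configs also have Mojo definitions, so they can cross process boundaries over Mojo IPC, and they end up available on the Renderer side. In principle, then, the approach is feasible.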

Designing the MediaConfig API

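Before implementing anything, we need to decide how to obtain the video information and expose it cleanly to the frontend. The best option I could think of was to implement the API directly on the HTMLMediaElement class, so that both video and audio elements can report their codec information. Here is the final IDL, shown up front: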

interface MediaDecoderConfig {

// Total bitrate (not yet supported for object URLs)

readonly totalBitrate: number;

// Total bitrate of the video streams (not yet supported for object URLs)

readonly videoBitrate: number;

// Video codec

readonly videoCodec: string;

// Video profile

readonly videoProfile: string;

// Video level

readonly videoLevel: string;

// Video color space: primaries

readonly videoColorSpacePrimaries: string;

// Video color space: transfer function

readonly videoColorSpaceTransfer: string;

// Video color space: matrix

readonly videoColorSpaceMatrix: string;

// Video color space: range

readonly videoColorSpaceRange: string;

// Video coded width

readonly videoCodedSizeWidth: number;

// Video coded height

readonly videoCodedSizeHeight: number;

// Video natural width

readonly videoNaturalSizeWidth: number;

// Video natural height

readonly videoNaturalSizeHeight: number;

// Video visible rect: X origin

readonly videoVisibleRectX: number;

// Video visible rect: Y origin

readonly videoVisibleRectY: number;

// Video visible rect: width

readonly videoVisibleRectWidth: number;

// Video visible rect: height

readonly videoVisibleRectHeight: number;

// Video encryption scheme

readonly videoEncryption: string;

// Video HDR metadata: luminance range

readonly videoHdrMetadataLuminanceRange: string;

// Video HDR metadata: primaries

readonly videoHdrMetadataPrimaries: string;

// Video HDR metadata: MaxContentLightLevel

readonly videoHdrMetadataMaxContentLightLevel: number;

// Video HDR metadata: MaxFrameAverageLightLevel

readonly videoHdrMetadataMaxFrameAverageLightLevel: number;

// Video rotation

readonly videoTransformationRotation: string;

// Whether the video is flipped (mirrored)

readonly videoTransformationFlipped: boolean;

// Whether the video has an alpha channel

readonly videoHasAlpha: boolean;

// Whether the video has (internal) extra data; can be ignored

readonly videoHasExtraData: boolean;

// Audio bitrate

readonly audioBitrate: number;

// Audio codec

readonly audioCodec: string;

// Audio profile

readonly audioProfile: string;

// Audio bytes per channel

readonly audioBytesPerChannel: number;

// Audio channel layout

readonly audioChannelLayout: string;

// Number of audio channels

readonly audioChannels: number;

// Audio sample rate

readonly audioSamplesPerSecond: number;

// Audio sample format

readonly audioSampleFormat: string;

// Audio bytes per frame

readonly audioBytesPerFrame: number;

// Audio seek preroll

readonly audioSeekPreroll: string;

// Audio codec delay

readonly audioCodecDelay: number;

// Audio encryption scheme

readonly audioEncryption: string;

// Audio target output channel layout

readonly audioTargetOutputChannelLayout: string;

// Whether the audio decoder delay should be discarded

readonly audioShouldDiscardDecoderDelay: boolean;

// Whether the audio has (internal) extra data; can be ignored

readonly audioHasExtraData: boolean;

}

interface HTMLMediaElementEventMap extends HTMLElementEventMap {

mediaconfigchange: Event;

}

interface HTMLMediaElement extends HTMLElement {

// Video config attribute, visible in the DOM

readonly videoConfig: string;

// Audio config attribute, readable from the DOM

readonly audioConfig: string;

// When this event fires, the media config can be read

onmediaconfigchange: ((this: HTMLMediaElement, ev: Event) => any) | null;

// Get the audio/video config

getMediaConfig(): MediaDecoderConfig;

}

Listening for Audio/Video Config Changes

Looking through Blink, WebMediaPlayerImpl implements an OnMetadata() method that is called whenever the audio/video metadata changes. At its core, the API we want simply hooks into this OnMetadata() call:
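The wiring can be summarized with a self-contained, heavily simplified sketch. Every type here is a hypothetical stand-in: VideoConfig/AudioConfig/Metadata for media::VideoDecoderConfig, media::AudioDecoderConfig and media::PipelineMetadata; Player for WebMediaPlayerImpl; MediaElement for HTMLMediaElement. Only the three callback names come from the patch. The player forwards each track's config, then sends one summary notification. The element caches what it receives and records the event it would queue for script.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified stand-ins for the media:: config types.
struct VideoConfig {
  std::string codec;
  int coded_width = 0;
  int coded_height = 0;
};
struct AudioConfig {
  std::string codec;
  int channels = 0;
};
struct Metadata {
  bool has_audio = false;
  bool has_video = false;
  VideoConfig video;
  AudioConfig audio;
};

// Mirrors the three callbacks the patch adds to WebMediaPlayerClient.
class PlayerClient {
 public:
  virtual ~PlayerClient() = default;
  virtual void OnAudioConfigChange(const AudioConfig& config) = 0;
  virtual void OnVideoConfigChange(const VideoConfig& config) = 0;
  virtual void OnMediaConfigChange(bool has_video, bool has_audio) = 0;
};

// Plays the role of WebMediaPlayerImpl::OnMetadata().
class Player {
 public:
  explicit Player(PlayerClient* client) : client_(client) {}

  void OnMetadata(const Metadata& metadata) {
    if (!client_)
      return;
    if (metadata.has_audio)
      client_->OnAudioConfigChange(metadata.audio);
    if (metadata.has_video)
      client_->OnVideoConfigChange(metadata.video);
    // Sent last, so the element already holds both configs when it fires.
    client_->OnMediaConfigChange(metadata.has_video, metadata.has_audio);
  }

 private:
  PlayerClient* client_;
};

// Plays the role of HTMLMediaElement.
class MediaElement : public PlayerClient {
 public:
  void OnAudioConfigChange(const AudioConfig& config) override { audio = config; }
  void OnVideoConfigChange(const VideoConfig& config) override { video = config; }
  void OnMediaConfigChange(bool has_video_track, bool has_audio_track) override {
    has_video = has_video_track;
    has_audio = has_audio_track;
    queued_events.push_back("mediaconfigchange");
  }

  bool has_video = false;
  bool has_audio = false;
  VideoConfig video;
  AudioConfig audio;
  std::vector<std::string> queued_events;
};
```

The ordering is the design point. Because the summary callback comes after the per-track callbacks, a script handler for mediaconfigchange always sees a consistent snapshot.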

diff --git a/third_party/blink/renderer/platform/media/web_media_player_impl.cc b/third_party/blink/renderer/platform/media/web_media_player_impl.cc

index 18b3ef5fe312d..003b26e1d123f 100644

--- a/third_party/blink/renderer/platform/media/web_media_player_impl.cc

+++ b/third_party/blink/renderer/platform/media/web_media_player_impl.cc

@@ -1919,6 +1919,9 @@ void WebMediaPlayerImpl::OnMetadata(const media::PipelineMetadata& metadata) {

media_metrics_provider_->SetHasAudio(metadata.audio_decoder_config.codec());

RecordEncryptionScheme("Audio",

metadata.audio_decoder_config.encryption_scheme());

// Notify the client of the audio config via a new OnAudioConfigChange() method

+

+ if (client_)

+ client_->OnAudioConfigChange(metadata.audio_decoder_config);

}

if (HasVideo()) {

@@ -1926,6 +1929,9 @@ void WebMediaPlayerImpl::OnMetadata(const media::PipelineMetadata& metadata) {

RecordEncryptionScheme("Video",

metadata.video_decoder_config.encryption_scheme());

// Notify the client of the video config via a new OnVideoConfigChange() method

+ if (client_)

+ client_->OnVideoConfigChange(metadata.video_decoder_config);

+

if (overlay_enabled_) {

// SurfaceView doesn't support rotated video, so transition back if

// the video is now rotated. If `always_enable_overlays_`, we keep the

@@ -1948,6 +1954,9 @@ void WebMediaPlayerImpl::OnMetadata(const media::PipelineMetadata& metadata) {

}

}

// Finally, call OnMediaConfigChange() to report whether audio and/or video is present

+ if (client_)

+ client_->OnMediaConfigChange(HasVideo(), HasAudio());

+

if (observer_)

observer_->OnMetadataChanged(pipeline_metadata_);

diff --git a/third_party/blink/public/platform/web_media_player_client.h b/third_party/blink/public/platform/web_media_player_client.h

index 55e80c1e54281..c6ef56e36c614 100644

--- a/third_party/blink/public/platform/web_media_player_client.h

+++ b/third_party/blink/public/platform/web_media_player_client.h

@@ -218,6 +218,13 @@ class BLINK_PLATFORM_EXPORT WebMediaPlayerClient {

// See https://wicg.github.io/video-rvfc/.

virtual void OnRequestVideoFrameCallback() {}

+ // On metadata ready and video config acquired

+ virtual void OnVideoConfigChange(const media::VideoDecoderConfig& video_config) = 0;

+ // On metadata ready and audio config acquired

+ virtual void OnAudioConfigChange(const media::AudioDecoderConfig& audio_config) = 0;

+ // On metadata ready and audio/video config acquired, fire the event

+ virtual void OnMediaConfigChange(bool has_video, bool has_audio) = 0;

+

struct Features {

WebString id;

WebString width;

Implementing the API on HTMLMediaElement

Once we receive the audio/video config changes, we need to implement our methods on HTMLMediaElement.
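The job of getMediaConfig() is essentially flattening: copying fields out of the stored decoder configs into one record containing only strings, numbers and booleans, which bindings can expose to script. This is a minimal sketch of that idea. VideoConfig, AudioConfig, FlatMediaConfig and FlattenMediaConfig are hypothetical names, and only a few representative fields are shown.

```cpp
#include <cassert>
#include <string>

// Hypothetical simplified decoder configs; the real ones are
// media::VideoDecoderConfig and media::AudioDecoderConfig.
struct VideoConfig {
  std::string codec;
  std::string profile;
  int coded_width = 0;
  int coded_height = 0;
};
struct AudioConfig {
  std::string codec;
  int channels = 0;
  int samples_per_second = 0;
};

// Flat, script-facing record in the spirit of the MediaDecoderConfig IDL
// type: one plain field per property.
struct FlatMediaConfig {
  std::string video_codec;
  std::string video_profile;
  int video_coded_width = 0;
  int video_coded_height = 0;
  std::string audio_codec;
  int audio_channels = 0;
  int audio_samples_per_second = 0;
};

// Copies fields only for tracks reported as present. A stale config for a
// missing track is ignored, and its fields keep their defaults ("" and 0).
FlatMediaConfig FlattenMediaConfig(bool has_video, const VideoConfig& video,
                                   bool has_audio, const AudioConfig& audio) {
  FlatMediaConfig out;
  if (has_video) {
    out.video_codec = video.codec;
    out.video_profile = video.profile;
    out.video_coded_width = video.coded_width;
    out.video_coded_height = video.coded_height;
  }
  if (has_audio) {
    out.audio_codec = audio.codec;
    out.audio_channels = audio.channels;
    out.audio_samples_per_second = audio.samples_per_second;
  }
  return out;
}
```

Gating on the presence flags, rather than on whatever config happens to be cached, keeps a previous source's audio track from leaking into the result after the element switches to a video-only source.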

diff --git a/third_party/blink/renderer/core/html/media/html_media_element.cc b/third_party/blink/renderer/core/html/media/html_media_element.cc

index cfcb19002a16a..cde5b8b8cc4ac 100644

--- a/third_party/blink/renderer/core/html/media/html_media_element.cc

+++ b/third_party/blink/renderer/core/html/media/html_media_element.cc

@@ -29,6 +29,7 @@

#include <algorithm>

#include <limits>

+#include <string>

#include "base/auto_reset.h"

#include "base/debug/crash_logging.h"

#include "base/feature_list.h"

@@ -56,6 +57,7 @@

#include "third_party/blink/public/platform/web_media_player_source.h"

#include "third_party/blink/renderer/bindings/core/v8/script_controller.h"

#include "third_party/blink/renderer/bindings/core/v8/script_promise_resolver.h"

+#include "third_party/blink/renderer/bindings/core/v8/v8_binding_for_core.h"

#include "third_party/blink/renderer/core/core_initializer.h"

#include "third_party/blink/renderer/core/core_probes_inl.h"

#include "third_party/blink/renderer/core/css/media_list.h"

@@ -83,6 +85,7 @@

#include "third_party/blink/renderer/core/html/media/autoplay_policy.h"

#include "third_party/blink/renderer/core/html/media/html_media_element_controls_list.h"

#include "third_party/blink/renderer/core/html/media/media_controls.h"

+#include "third_party/blink/renderer/core/html/media/media_decoder_config.h"

#include "third_party/blink/renderer/core/html/media/media_error.h"

#include "third_party/blink/renderer/core/html/media/media_fragment_uri_parser.h"

#include "third_party/blink/renderer/core/html/media/media_source_attachment.h"

@@ -1539,6 +1542,24 @@ void HTMLMediaElement::DidAudioOutputSinkChanged(

observer->OnAudioOutputSinkChanged(hashed_device_id);

}

+void HTMLMediaElement::OnMediaConfigChange(bool has_video, bool has_audio) {

// Record whether audio and video configs are present

+ has_video_ = has_video;

+ has_audio_ = has_audio;

// Queue an event on the HTMLMediaElement to notify the frontend

+ ScheduleEvent(event_type_names::kMediaconfigchange);

+}

+

+void HTMLMediaElement::OnVideoConfigChange(const media::VideoDecoderConfig& config) {

// Store the VideoDecoderConfig

+ video_config_ = config;

+ const std::string video_config = config.AsHumanReadableString();

// Set the attribute on the HTMLMediaElement

+ setAttribute(html_names::kVideoconfigAttr, AtomicString(video_config.c_str()));

+}

+

+void HTMLMediaElement::OnAudioConfigChange(const media::AudioDecoderConfig& config) {

// Store the AudioDecoderConfig

+ audio_config_ = config;

+ const std::string audio_config = config.AsHumanReadableString();

// Set the attribute on the HTMLMediaElement

+ setAttribute(html_names::kAudioconfigAttr, AtomicString(audio_config.c_str()));

+}

+

void HTMLMediaElement::SetMediaPlayerHostForTesting(

mojo::PendingAssociatedRemote<media::mojom::blink::MediaPlayerHost> host) {

media_player_host_remote_->Value().Bind(

@@ -2528,6 +2549,115 @@ bool HTMLMediaElement::Autoplay() const {

return FastHasAttribute(html_names::kAutoplayAttr);

}

// Read-only videoConfig attribute implemented on HTMLMediaElement

+String HTMLMediaElement::videoConfig() const {

+ LOG(INFO) << __FUNCTION__ << "getVideoConfig";

+ return FastGetAttribute(html_names::kVideoconfigAttr).GetString();

+}

+

+ // Read-only audioConfig attribute implemented on HTMLMediaElement

+String HTMLMediaElement::audioConfig() const {

+ LOG(INFO) << __FUNCTION__ << "getAudioConfig";

+ return FastGetAttribute(html_names::kAudioconfigAttr).GetString();

+}

+ // Implementation of the getMediaConfig() API we are ultimately after. The basic idea is

+ // to convert the audio/video configs into types supported by Blink IDL

+MediaDecoderConfig* HTMLMediaElement::getMediaConfig() {

+ MediaDecoderConfig* config = MakeGarbageCollected<MediaDecoderConfig>();

+

+ if (has_video_) {

+ const int total_bitrate = video_config_.GetHumanReadableTotalBitrate();

+ const int video_bitrate = video_config_.GetHumanReadableVideoBitrate();

+ const AtomicString video_codec = AtomicString(video_config_.GetHumanReadableCodecName().c_str());

+ const AtomicString video_profile = AtomicString(video_config_.GetHumanReadableProfile().c_str());

+ const AtomicString video_level = AtomicString(video_config_.GetHumanReadableLevel().c_str());

+ const AtomicString video_color_space_primaries = AtomicString(video_config_.GetHumanReadableColorSpacePrimaries().c_str());

+ const AtomicString video_color_space_transfer = AtomicString(video_config_.GetHumanReadableColorSpaceTransfer().c_str());

+ const AtomicString video_color_space_matrix = AtomicString(video_config_.GetHumanReadableColorSpaceMatrix().c_str());

+ const AtomicString video_color_space_range = AtomicString(video_config_.GetHumanReadableColorSpaceRange().c_str());

+ const float video_coded_size_width = video_config_.GetHumanReadableCodedSizeWidth();

+ const float video_coded_size_height = video_config_.GetHumanReadableCodedSizeHeight();

+ const float video_natural_size_width = video_config_.GetHumanReadableNaturalSizeWidth();

+ const float video_natural_size_height = video_config_.GetHumanReadableNaturalSizeHeight();

+ const float video_visible_rect_x = video_config_.GetHumanReadableVisibleRectX();

+ const float video_visible_rect_y = video_config_.GetHumanReadableVisibleRectY();

+ const float video_visible_rect_width = video_config_.GetHumanReadableVisibleRectWidth();

+ const float video_visible_rect_height = video_config_.GetHumanReadableVisibleRectHeight();

+ const AtomicString video_encryption = AtomicString(video_config_.GetHumanReadableEncryption().c_str());

+ const AtomicString video_hdr_metadata_luminance_range = AtomicString(video_config_.GetHumanReadableHDRMetadataLuminanceRange().c_str());

+ const AtomicString video_hdr_metadata_primaries = AtomicString(video_config_.GetHumanReadableHDRMetadataPrimaries().c_str());

+ const unsigned video_hdr_metadata_max_content_light_level = video_config_.GetHumanReadableHDRMetadataMaxContentLightLevel();

+ const unsigned video_hdr_metadata_max_frame_average_light_level = video_config_.GetHumanReadableHDRMetadataMaxFrameAverageLightLevel();

+ const AtomicString video_transformation_rotation = AtomicString(video_config_.GetHumanReadableTransformationRotation().c_str());

+ const bool video_transformation_flipped = video_config_.GetHumanReadableTransformationFlipped();

+ const bool video_has_alpha = video_config_.GetHumanReadableHasAlpha();

+ const bool video_has_extra_data = video_config_.GetHumanReadableHasExtraData();

+

+ config->SetVideoConfig(total_bitrate,

+ video_bitrate,

+ video_codec,

+ video_profile,

+ video_level,

+ video_color_space_primaries,

+ video_color_space_transfer,

+ video_color_space_matrix,

+ video_color_space_range,

+ video_coded_size_width,

+ video_coded_size_height,

+ video_natural_size_width,

+ video_natural_size_height,

+ video_visible_rect_x,

+ video_visible_rect_y,

+ video_visible_rect_width,

+ video_visible_rect_height,

+ video_encryption,

+ video_hdr_metadata_luminance_range,

+ video_hdr_metadata_primaries,

+ video_hdr_metadata_max_content_light_level,

+ video_hdr_metadata_max_frame_average_light_level,

+ video_transformation_rotation,

+ video_transformation_flipped,

+ video_has_alpha,

+ video_has_extra_data);

+ }

+

+ if (has_audio_) {

+ const int total_bitrate = audio_config_.GetHumanReadableTotalBitrate();

+ const int audio_bitrate = audio_config_.GetHumanReadableAudioBitrate();

// Note: std::string must be converted to a Blink string type (AtomicString here) before it can be exposed to the frontend

+ const AtomicString audio_codec = AtomicString(audio_config_.GetHumanReadableCodecName().c_str());

+ const AtomicString audio_profile = AtomicString(audio_config_.GetHumanReadableProfile().c_str());

+ const int audio_bytes_per_channel = audio_config_.GetHumanReadableBytesPerChannel();

+ const AtomicString audio_channel_layout = AtomicString(audio_config_.GetHumanReadableChannelLayout().c_str());

+ const int audio_channels = audio_config_.GetHumanReadableChannels();

+ const int audio_samples_per_second = audio_config_.GetHumanReadableSamplesPerSecond();

+ const AtomicString audio_sample_format = AtomicString(audio_config_.GetHumanReadableSampleFormat().c_str());

+ const int audio_bytes_per_frame = audio_config_.GetHumanReadableBytesPerFrame();

+ const AtomicString audio_seek_preroll = AtomicString(audio_config_.GetHumanReadableSeekPreroll().c_str());

+ const int audio_codec_delay = audio_config_.GetHumanReadableCodecDelay();

+ const AtomicString audio_encryption = AtomicString(audio_config_.GetHumanReadableEncryption().c_str());

+ const AtomicString audio_target_output_channel_layout = AtomicString(audio_config_.GetHumanReadableTargetOutputChannelLayout().c_str());

+ const bool audio_should_discard_decoder_delay = audio_config_.GetHumanReadableShouldDiscardDecoderDelay();

+ const bool audio_has_extra_data = audio_config_.GetHumanReadableHasExtraData();

+

+ config->SetAudioConfig(total_bitrate,

+ audio_bitrate,

+ audio_codec,

+ audio_profile,

+ audio_bytes_per_channel,

+ audio_channel_layout,

+ audio_channels,

+ audio_samples_per_second,

+ audio_sample_format,

+ audio_bytes_per_frame,

+ audio_seek_preroll,

+ audio_codec_delay,

+ audio_encryption,

+ audio_target_output_channel_layout,

+ audio_should_discard_decoder_delay,

+ audio_has_extra_data);

+ }

+

+ return config;

+}

+

String HTMLMediaElement::preload() const {

if (GetLoadType() == WebMediaPlayer::kLoadTypeMediaStream)

return PreloadTypeToString(WebMediaPlayer::kPreloadNone);

diff --git a/third_party/blink/renderer/core/html/media/html_media_element.h b/third_party/blink/renderer/core/html/media/html_media_element.h

index 4af2fe7c1c04b..eb5d1956196c2 100644

--- a/third_party/blink/renderer/core/html/media/html_media_element.h

+++ b/third_party/blink/renderer/core/html/media/html_media_element.h

@@ -31,6 +31,7 @@

#include "base/time/time.h"

#include "base/timer/elapsed_timer.h"

+#include "media/base/audio_decoder.h"

#include "media/mojo/mojom/media_player.mojom-blink.h"

#include "third_party/abseil-cpp/absl/types/optional.h"

#include "third_party/blink/public/common/media/display_type.h"

@@ -56,6 +57,7 @@

#include "third_party/blink/renderer/platform/timer.h"

#include "third_party/blink/renderer/platform/weborigin/kurl.h"

#include "third_party/blink/renderer/platform/wtf/threading_primitives.h"

+#include "third_party/blink/renderer/core/html/media/media_decoder_config.h"

namespace cc {

class Layer;

@@ -93,6 +95,7 @@ class VideoTrack;

class VideoTrackList;

class WebInbandTextTrack;

class WebRemotePlaybackClient;

+class MediaDecoderConfig;

class CORE_EXPORT HTMLMediaElement

: public HTMLElement,

@@ -190,6 +193,10 @@ class CORE_EXPORT HTMLMediaElement

String EffectivePreload() const;

WebMediaPlayer::Preload EffectivePreloadType() const;

+ String videoConfig() const;

+ String audioConfig() const;

+ MediaDecoderConfig* getMediaConfig();

+

WebTimeRanges BufferedInternal() const;

TimeRanges* buffered() const;

void load();

@@ -254,6 +261,11 @@ class CORE_EXPORT HTMLMediaElement

void SetUserWantsControlsVisible(bool visible);

bool UserWantsControlsVisible() const;

+ void OnMediaConfigChange(bool has_video, bool has_audio) override;

+ void OnVideoConfigChange(const media::VideoDecoderConfig& video_config) override;

+ void OnAudioConfigChange(const media::AudioDecoderConfig& audio_config) override;

+

void TogglePlayState();

AudioTrackList& audioTracks();

@@ -663,6 +675,11 @@ class CORE_EXPORT HTMLMediaElement

KURL current_src_after_redirects_;

Member<MediaStreamDescriptor> src_object_;

+ bool has_video_ = false;

+ bool has_audio_ = false;

+ media::VideoDecoderConfig video_config_;

+ media::AudioDecoderConfig audio_config_;

+

// To prevent potential regression when extended by the MSE API, do not set

// |error_| outside of constructor and SetError().

Member<MediaError> error_;

Next, we need to declare the new API in the IDL:

diff --git a/third_party/blink/renderer/core/html/media/html_media_element.idl b/third_party/blink/renderer/core/html/media/html_media_element.idl

index a041c328f1dec..ed2d93a8f403f 100644

--- a/third_party/blink/renderer/core/html/media/html_media_element.idl

+++ b/third_party/blink/renderer/core/html/media/html_media_element.idl

@@ -46,6 +46,9 @@ enum CanPlayTypeResult { "" /* empty string */, "maybe", "probably" };

const unsigned short NETWORK_NO_SOURCE = 3;

[ImplementedAs=getNetworkState] readonly attribute unsigned short networkState;

[CEReactions] attribute DOMString preload;

+ readonly attribute DOMString videoConfig;

+ readonly attribute DOMString audioConfig;

+ MediaDecoderConfig getMediaConfig();

readonly attribute TimeRanges buffered;

void load();

[CallWith=ExecutionContext, HighEntropy, Measure] CanPlayTypeResult canPlayType(DOMString type);

Computing Audio/Video Bitrates

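VideoDecoderConfig does not carry the bitrate, which is an important parameter. To collect more detailed video information, we also need to compute the bitrate of the audio and video tracks during demuxing.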
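A useful baseline here is the container-level average bitrate. The hunk context of the ffmpeg_demuxer.cc diff shows Chromium's existing CalculateBitrate() ending in `filesize_in_bytes * duration.ToHz() * 8`, i.e. bytes × 8 ÷ seconds. This is a minimal standalone version of that formula. EstimateAverageBitrate is a hypothetical name, and the clamp to int mirrors the int type the patch uses for bitrates.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <limits>

// Average bitrate in bits per second from container size and duration:
// bytes * 8 / seconds. Returns 0 when either input is unknown or invalid.
int EstimateAverageBitrate(int64_t file_size_bytes, double duration_seconds) {
  if (file_size_bytes <= 0 || !(duration_seconds > 0.0))  // also rejects NaN
    return 0;
  const double bits_per_second =
      static_cast<double>(file_size_bytes) * 8.0 / duration_seconds;
  // Clamp so the result fits in an int, the type used for bitrates here.
  if (bits_per_second >= static_cast<double>(std::numeric_limits<int>::max()))
    return std::numeric_limits<int>::max();
  return static_cast<int>(std::lround(bits_per_second));
}
```

This figure includes container overhead and every track, so it is only an upper bound for the audio plus video sum. Per-stream bitrates have to come from the streams themselves.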

diff --git a/third_party/blink/public/platform/web_media_player.h b/third_party/blink/public/platform/web_media_player.h

index 306f13ae8af00..da703e6e7bf1d 100644

--- a/third_party/blink/public/platform/web_media_player.h

+++ b/third_party/blink/public/platform/web_media_player.h

@@ -186,6 +186,8 @@ class WebMediaPlayer {

virtual void FlingingStarted() {}

virtual void FlingingStopped() {}

virtual void SetPreload(Preload) {}

+ virtual void SetVideoConfig(const AtomicString&) {}

+ virtual void SetAudioConfig(const AtomicString&) {}

virtual WebTimeRanges Buffered() const = 0;

virtual WebTimeRanges Seekable() const = 0;

diff --git a/media/filters/ffmpeg_demuxer.h b/media/filters/ffmpeg_demuxer.h

index 8d4c66e7611ac..ec8893131bb66 100644

--- a/media/filters/ffmpeg_demuxer.h

+++ b/media/filters/ffmpeg_demuxer.h

@@ -123,6 +123,9 @@ class MEDIA_EXPORT FFmpegDemuxerStream : public DemuxerStream {

AudioDecoderConfig audio_decoder_config() override;

VideoDecoderConfig video_decoder_config() override;

+ void SetAudioBitrate(int audio_bitrate, int bitrate);

+ void SetVideoBitrate(int video_bitrate, int bitrate);

+

bool IsEnabled() const;

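void SetEnabled(bool enabled, base::TimeDelta timestamp);

Because a file may contain more than one audio or video stream, the bitrates of all streams of the same type have to be summed.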
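Below is a minimal sketch of the per-type summation done by CalculateAudioBitrate()/CalculateVideoBitrate(), with FFmpeg replaced by a hypothetical StreamInfo struct holding the two AVCodecParameters fields the patch reads. In FFmpeg, AVCodecParameters::bit_rate is an int64_t. The sketch therefore accumulates in 64 bits and clamps at the end, whereas the patch adds directly into an int.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <vector>

enum class StreamType { kAudio, kVideo, kOther };

// Hypothetical stand-in for codecpar->codec_type and codecpar->bit_rate.
// bit_rate is 0 when the container does not declare it.
struct StreamInfo {
  StreamType type;
  int64_t bit_rate;
};

// Sums the declared bitrates of every stream of `type`. Accumulates in
// 64 bits, then clamps to int (the type the patch stores bitrates in).
int SumStreamBitrate(const std::vector<StreamInfo>& streams, StreamType type) {
  int64_t total = 0;
  for (const StreamInfo& stream : streams) {
    if (stream.type == type && stream.bit_rate > 0)
      total += stream.bit_rate;
  }
  if (total > std::numeric_limits<int>::max())
    return std::numeric_limits<int>::max();
  return static_cast<int>(total);
}
```

Streams with an undeclared bitrate contribute nothing. For some containers the sum can therefore undercount, and the file-size-based average is a reasonable fallback.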

diff --git a/media/filters/ffmpeg_demuxer.cc b/media/filters/ffmpeg_demuxer.cc

index a76be9a01c5be..47ded334541aa 100644

--- a/media/filters/ffmpeg_demuxer.cc

+++ b/media/filters/ffmpeg_demuxer.cc

@@ -830,6 +830,20 @@ VideoDecoderConfig FFmpegDemuxerStream::video_decoder_config() {

return *video_config_;

}

// Record the total audio bitrate and the combined audio + video bitrate

+void FFmpegDemuxerStream::SetAudioBitrate(int audio_bitrate, int bitrate) {

+ if (audio_config_->IsValidConfig()) {

+ audio_config_->SetAudioStreamBitrate(audio_bitrate);

+ audio_config_->SetTotalStreamBitrate(bitrate);

+ }

+}

+ // Record the total video bitrate and the combined audio + video bitrate

+void FFmpegDemuxerStream::SetVideoBitrate(int video_bitrate, int bitrate) {

+ if (video_config_->IsValidConfig()) {

+ video_config_->SetVideoStreamBitrate(video_bitrate);

+ video_config_->SetTotalStreamBitrate(bitrate);

+ }

+}

+

bool FFmpegDemuxerStream::IsEnabled() const {

DCHECK(task_runner_->RunsTasksInCurrentSequence());

return is_enabled_;

@@ -1236,6 +1250,40 @@ static int CalculateBitrate(AVFormatContext* format_context,

return base::ClampRound(filesize_in_bytes * duration.ToHz() * 8);

}

// Sum the bitrates of all audio streams

+static int CalculateAudioBitrate(AVFormatContext* format_context) {

+ int audio_stream_bitrate = 0;

+

+ for (size_t i = 0; i < format_context->nb_streams; ++i) {

+ AVCodecParameters* codec_parameters = format_context->streams[i]->codecpar;

+ AVMediaType codec_type = codec_parameters->codec_type;

+ if (codec_type == AVMEDIA_TYPE_AUDIO) {

+ audio_stream_bitrate += codec_parameters->bit_rate;

+ }

+ }

+

+ if (audio_stream_bitrate > 0)

+ return audio_stream_bitrate;

+

+ return 0;

+}

+ // Sum the bitrates of all video streams

+static int CalculateVideoBitrate(AVFormatContext* format_context) {

+ int video_stream_bitrate = 0;

+

+ for (size_t i = 0; i < format_context->nb_streams; ++i) {

+ AVCodecParameters* codec_parameters = format_context->streams[i]->codecpar;

+ AVMediaType codec_type = codec_parameters->codec_type;

+ if (codec_type == AVMEDIA_TYPE_VIDEO) {

+ video_stream_bitrate += codec_parameters->bit_rate;

+ }

+ }

+

+ if (video_stream_bitrate > 0)

+ return video_stream_bitrate;

+

+ return 0;

+}

+

void FFmpegDemuxer::OnOpenContextDone(bool result) {

DCHECK(task_runner_->RunsTasksInCurrentSequence());

if (stopped_) {

@@ -1563,6 +1611,20 @@ void FFmpegDemuxer::OnFindStreamInfoDone(int result) {

if (bitrate_ > 0)

data_source_->SetBitrate(bitrate_);

+ int video_stream_bitrate = CalculateVideoBitrate(format_context);

+ int audio_stream_bitrate = CalculateAudioBitrate(format_context);

+ int total_stream_bitrate = video_stream_bitrate + audio_stream_bitrate;

+

+ for (const auto& stream : streams_) {

+ if (stream) {

+ if (stream->type() == DemuxerStream::AUDIO) {

+ stream->SetAudioBitrate(audio_stream_bitrate, total_stream_bitrate);

+ } else if (stream->type() == DemuxerStream::VIDEO) {

+ stream->SetVideoBitrate(video_stream_bitrate, total_stream_bitrate);

+ }

+ }

+ }

+

LogMetadata(format_context, max_duration);

media_tracks_updated_cb_.Run(std::move(media_tracks));

Extending VideoDecoderConfig

We need to extract VideoDecoderConfig's properties into the types we want and let it store the bitrate, so the decoder config needs some extra extension:
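Most of the new getters follow one of two patterns. Either they return a value with a readable fallback, or they format optional metadata as a string that is empty when absent. This is a minimal sketch of both patterns with hypothetical stand-ins. kNoLevel replaces Chromium's kNoVideoCodecLevel, and its value of 0 is an assumption; LuminanceRange replaces the luminance part of gfx::HDRMetadata.

```cpp
#include <cassert>
#include <optional>
#include <sstream>
#include <string>

// Hypothetical "level unknown" sentinel (assumed value).
constexpr unsigned kNoLevel = 0;

// Hypothetical stand-in for the HDR luminance fields.
struct LuminanceRange {
  float min;
  float max;
};

// Same shape as GetHumanReadableLevel(): a number, or a readable fallback.
std::string LevelToString(unsigned level) {
  return level > kNoLevel ? std::to_string(level) : "not available";
}

// Same shape as GetHumanReadableHDRMetadataLuminanceRange(): "min-max"
// when HDR metadata exists, otherwise an empty string.
std::string LuminanceRangeToString(const std::optional<LuminanceRange>& range) {
  if (!range.has_value())
    return "";
  std::ostringstream s;
  s << range->min << "-" << range->max;
  return s.str();
}
```

One consequence of these fallbacks is that script cannot distinguish "absent" from an empty string or zero. Nullable IDL attributes would be an alternative if that distinction matters for the statistics.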

diff --git a/media/base/video_decoder_config.cc b/media/base/video_decoder_config.cc

index 40c9707fae4d8..d1b311003b975 100644

--- a/media/base/video_decoder_config.cc

+++ b/media/base/video_decoder_config.cc

// Convert the parameters we need into the formats we need

@@ -106,6 +106,11 @@ std::string VideoDecoderConfig::AsHumanReadableString() const {

<< ", rotation: " << VideoRotationToString(video_transformation().rotation)

<< ", flipped: " << video_transformation().mirrored

<< ", color space: " << color_space_info().ToGfxColorSpace().ToString();

+

+ if (totalStreamBitrate() > 0) {

+ s << ", total_bitrate: " << base::NumberToString(totalStreamBitrate())

+ << ", video_bitrate: " << base::NumberToString(videoStreamBitrate());

+ }

if (hdr_metadata().has_value()) {

s << std::setprecision(4) << ", luminance range: "

@@ -129,10 +134,158 @@ std::string VideoDecoderConfig::AsHumanReadableString() const {

return s.str();

}

+int VideoDecoderConfig::GetHumanReadableTotalBitrate() const {

+ return totalStreamBitrate();

+}

+

+int VideoDecoderConfig::GetHumanReadableVideoBitrate() const {

+ return videoStreamBitrate();

+}

+

std::string VideoDecoderConfig::GetHumanReadableCodecName() const {

return GetCodecName(codec());

}

+std::string VideoDecoderConfig::GetHumanReadableProfile() const {

+ return GetProfileName(profile());

+}

+

+std::string VideoDecoderConfig::GetHumanReadableLevel() const {

+ return level() > kNoVideoCodecLevel ? base::NumberToString(level()) : "not available";

+}

+

+std::string VideoDecoderConfig::GetHumanReadableColorSpacePrimaries() const {

+ return color_space_info().ToGfxColorSpace().GetPrimariesString();

+}

+

+std::string VideoDecoderConfig::GetHumanReadableColorSpaceTransfer() const {

+ return color_space_info().ToGfxColorSpace().GetTransferString();

+}

+

+std::string VideoDecoderConfig::GetHumanReadableColorSpaceMatrix() const {

+ return color_space_info().ToGfxColorSpace().GetMatrixString();

+}

+

+std::string VideoDecoderConfig::GetHumanReadableColorSpaceRange() const {

+ return color_space_info().ToGfxColorSpace().GetRangeString();

+}

+

+float VideoDecoderConfig::GetHumanReadableCodedSizeWidth() const {

+ return coded_size().width();

+}

+

+float VideoDecoderConfig::GetHumanReadableCodedSizeHeight() const {

+ return coded_size().height();

+}

+

+float VideoDecoderConfig::GetHumanReadableNaturalSizeWidth() const {

+ return natural_size().width();

+}

+

+float VideoDecoderConfig::GetHumanReadableNaturalSizeHeight() const {

+ return natural_size().height();

+}

+

+float VideoDecoderConfig::GetHumanReadableVisibleRectX() const {

+ return visible_rect().x();

+}

+

+float VideoDecoderConfig::GetHumanReadableVisibleRectY() const {

+ return visible_rect().y();

+}

+

+float VideoDecoderConfig::GetHumanReadableVisibleRectWidth() const {

+ return visible_rect().width();

+}

+

+float VideoDecoderConfig::GetHumanReadableVisibleRectHeight() const {

+ return visible_rect().height();

+}

+

+bool VideoDecoderConfig::GetHumanReadableHasAlpha() const {

+ return alpha_mode() == AlphaMode::kHasAlpha;

+}

+

+bool VideoDecoderConfig::GetHumanReadableHasExtraData() const {

+ return !extra_data().empty();

+}

+

+bool VideoDecoderConfig::GetHumanReadableTransformationFlipped() const {

+ return video_transformation().mirrored;

+}

+

+std::string VideoDecoderConfig::GetHumanReadableTransformationRotation() const {

+ return VideoRotationToString(video_transformation().rotation);

+}

+

+std::string VideoDecoderConfig::GetHumanReadableEncryption() const {

+ std::ostringstream s;

+ s << encryption_scheme();

+ return s.str();

+}

+

+std::string VideoDecoderConfig::GetHumanReadableHDRMetadataLuminanceRange() const {

+ if (hdr_metadata().has_value()) {

+ std::ostringstream s;

+ s << hdr_metadata()->color_volume_metadata.luminance_min << "-"

+ << hdr_metadata()->color_volume_metadata.luminance_max;

+ return s.str();

+ } else {

+ return "";

+ }

+}

+

+std::string VideoDecoderConfig::GetHumanReadableHDRMetadataPrimaries() const {

+ if (hdr_metadata().has_value()) {

+ std::ostringstream s;

+ s << std::setprecision(4)

+ << "r("

+ << hdr_metadata()->color_volume_metadata.primary_r.x()

+ << ","

+ << hdr_metadata()->color_volume_metadata.primary_r.y()

+ << ") g("

+ << hdr_metadata()->color_volume_metadata.primary_g.x()

+ << ","

+ << hdr_metadata()->color_volume_metadata.primary_g.y()

+ << ") b("

+ << hdr_metadata()->color_volume_metadata.primary_b.x()

+ << ","

+ << hdr_metadata()->color_volume_metadata.primary_b.y()

+ << ") wp("

+ << hdr_metadata()->color_volume_metadata.white_point.x()

+ << ","

+ << hdr_metadata()->color_volume_metadata.white_point.y()

+ << ")";

+ return s.str();

+ } else {

+ return "";

+ }

+}

+

+unsigned VideoDecoderConfig::GetHumanReadableHDRMetadataMaxContentLightLevel() const {

+ if (hdr_metadata().has_value()) {

+ return hdr_metadata()->max_content_light_level;

+ } else {

+ return 0;

+ }

+}

+

+unsigned VideoDecoderConfig::GetHumanReadableHDRMetadataMaxFrameAverageLightLevel() const {

+ if (hdr_metadata().has_value()) {

+ return hdr_metadata()->max_frame_average_light_level;

+ } else {

+ return 0;

+ }

+}

+

+void VideoDecoderConfig::SetTotalStreamBitrate(int total_stream_bitrate) {

+ total_stream_bitrate_ = total_stream_bitrate;

+}

+

+void VideoDecoderConfig::SetVideoStreamBitrate(int video_stream_bitrate) {

+ video_stream_bitrate_ = video_stream_bitrate;

+}

+

void VideoDecoderConfig::SetExtraData(const std::vector<uint8_t>& extra_data) {

extra_data_ = extra_data;

}

diff --git a/media/base/video_decoder_config.h b/media/base/video_decoder_config.h

index 1eb9bf07c46b7..59f0b1de48e87 100644

--- a/media/base/video_decoder_config.h

+++ b/media/base/video_decoder_config.h

@@ -74,7 +74,32 @@ class MEDIA_EXPORT VideoDecoderConfig {

// Returns a human-readable string describing |*this|.

std::string AsHumanReadableString() const;

+ int GetHumanReadableTotalBitrate() const;

+ int GetHumanReadableVideoBitrate() const;

std::string GetHumanReadableCodecName() const;

+ std::string GetHumanReadableProfile() const;

+ std::string GetHumanReadableLevel() const;

+ std::string GetHumanReadableColorSpacePrimaries() const;

+ std::string GetHumanReadableColorSpaceTransfer() const;

+ std::string GetHumanReadableColorSpaceMatrix() const;

+ std::string GetHumanReadableColorSpaceRange() const;

+ float GetHumanReadableCodedSizeWidth() const;

+ float GetHumanReadableCodedSizeHeight() const;

+ float GetHumanReadableNaturalSizeWidth() const;

+ float GetHumanReadableNaturalSizeHeight() const;

+ float GetHumanReadableVisibleRectX() const;

+ float GetHumanReadableVisibleRectY() const;

+ float GetHumanReadableVisibleRectWidth() const;

+ float GetHumanReadableVisibleRectHeight() const;

+ bool GetHumanReadableHasAlpha() const;

+ bool GetHumanReadableHasExtraData() const;

+ bool GetHumanReadableTransformationFlipped() const;

+ std::string GetHumanReadableTransformationRotation() const;

+ std::string GetHumanReadableEncryption() const;

+ std::string GetHumanReadableHDRMetadataLuminanceRange() const;

+ std::string GetHumanReadableHDRMetadataPrimaries() const;

+ unsigned GetHumanReadableHDRMetadataMaxContentLightLevel() const;

+ unsigned GetHumanReadableHDRMetadataMaxFrameAverageLightLevel() const;

VideoCodec codec() const { return codec_; }

VideoCodecProfile profile() const { return profile_; }

@@ -155,6 +180,12 @@ class MEDIA_EXPORT VideoDecoderConfig {

// useful for decryptors that decrypts an encrypted stream to a clear stream.

void SetIsEncrypted(bool is_encrypted);

+ // Sets/gets the total (audio + video) stream bitrate and the video-only bitrate.

+ int totalStreamBitrate() const { return total_stream_bitrate_; }

+ void SetTotalStreamBitrate(int total_stream_bitrate);

+ int videoStreamBitrate() const { return video_stream_bitrate_; }

+ void SetVideoStreamBitrate(int video_stream_bitrate);

+

// Sets whether this config is for WebRTC or not.

void set_is_rtc(bool is_rtc) { is_rtc_ = is_rtc; }

bool is_rtc() const { return is_rtc_; }

@@ -186,6 +217,9 @@ class MEDIA_EXPORT VideoDecoderConfig {

absl::optional<gfx::HDRMetadata> hdr_metadata_;

bool is_rtc_ = false;

+ int total_stream_bitrate_ = 0;

+ int video_stream_bitrate_ = 0;

+

// Not using DISALLOW_COPY_AND_ASSIGN here intentionally to allow the compiler

// generated copy constructor and assignment operator. Since the extra data is

// typically small, the performance impact is minimal.

Extending AudioDecoderConfig

We also need AudioDecoderConfig to expose its properties as the types we want, and to store bitrates. So AudioDecoderConfig needs a few extensions of its own:
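Below is a minimal standalone sketch of the bitrate part of this extension. It uses only the standard library. `AudioConfigSketch` is a hypothetical name, not a Chromium class. It keeps only the two new fields and a cut-down `AsHumanReadableString()`. The point is the convention the patch relies on: 0 means "bitrate unknown", and the bitrate fields are added to the log string only after a total bitrate has been set.

```cpp
#include <sstream>
#include <string>

// Hypothetical, trimmed-down stand-in for media::AudioDecoderConfig that
// models only the two bitrate fields added by the patch.
class AudioConfigSketch {
 public:
  // 0 means "unknown", matching the default member initializers in the patch.
  int totalStreamBitrate() const { return total_stream_bitrate_; }
  int audioStreamBitrate() const { return audio_stream_bitrate_; }
  void SetTotalStreamBitrate(int bitrate) { total_stream_bitrate_ = bitrate; }
  void SetAudioStreamBitrate(int bitrate) { audio_stream_bitrate_ = bitrate; }

  // Mirrors the patched AsHumanReadableString(): bitrate fields are appended
  // only when a total bitrate has actually been recorded.
  std::string AsHumanReadableString(const std::string& codec) const {
    std::ostringstream s;
    s << "codec: " << codec;
    if (totalStreamBitrate() > 0) {
      s << ", total_bitrate: " << totalStreamBitrate()
        << ", audio_bitrate: " << audioStreamBitrate();
    }
    return s.str();
  }

 private:
  int total_stream_bitrate_ = 0;
  int audio_stream_bitrate_ = 0;
};
```

Because the fields default to 0, configs produced before any bitrate is known still print exactly as they did before the patch.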

diff --git a/media/base/audio_decoder_config.cc b/media/base/audio_decoder_config.cc

index 1fcbb4119aa35..7c5d9056a5b6d 100644

--- a/media/base/audio_decoder_config.cc

+++ b/media/base/audio_decoder_config.cc

@@ -5,6 +5,7 @@

#include "media/base/audio_decoder_config.h"

#include "base/logging.h"

+#include "base/strings/string_number_conversions.h"

#include "media/base/limits.h"

#include "media/base/media_util.h"

@@ -99,9 +100,91 @@ std::string AudioDecoderConfig::AsHumanReadableString() const {

<< (should_discard_decoder_delay() ? "true" : "false")

<< ", target_output_channel_layout: "

<< ChannelLayoutToString(target_output_channel_layout());

+

+ if (totalStreamBitrate() > 0) {

+ s << ", total_bitrate: " << base::NumberToString(totalStreamBitrate())

+ << ", audio_bitrate: " << base::NumberToString(audioStreamBitrate());

+ }

+

+ return s.str();

+}

+

+int AudioDecoderConfig::GetHumanReadableTotalBitrate() const {

+ return totalStreamBitrate();

+}

+

+int AudioDecoderConfig::GetHumanReadableAudioBitrate() const {

+ return audioStreamBitrate();

+}

+

+std::string AudioDecoderConfig::GetHumanReadableCodecName() const {

+ return GetCodecName(codec());

+}

+

+std::string AudioDecoderConfig::GetHumanReadableProfile() const {

+ return GetProfileName(profile());

+}

+

+int AudioDecoderConfig::GetHumanReadableBytesPerChannel() const {

+ return bytes_per_channel();

+}

+

+std::string AudioDecoderConfig::GetHumanReadableChannelLayout() const {

+ return ChannelLayoutToString(channel_layout());

+}

+

+int AudioDecoderConfig::GetHumanReadableChannels() const {

+ return channels();

+}

+

+int AudioDecoderConfig::GetHumanReadableSamplesPerSecond() const {

+ return samples_per_second();

+}

+

+std::string AudioDecoderConfig::GetHumanReadableSampleFormat() const {

+ return SampleFormatToString(sample_format());

+}

+

+int AudioDecoderConfig::GetHumanReadableBytesPerFrame() const {

+ return bytes_per_frame();

+}

+

+std::string AudioDecoderConfig::GetHumanReadableSeekPreroll() const {

+ std::ostringstream s;

+ s << seek_preroll().InMicroseconds() << "us";

+ return s.str();

+}

+

+int AudioDecoderConfig::GetHumanReadableCodecDelay() const {

+ return codec_delay();

+}

+

+std::string AudioDecoderConfig::GetHumanReadableEncryption() const {

+ std::ostringstream s;

+ s << encryption_scheme();

return s.str();

}

+std::string AudioDecoderConfig::GetHumanReadableTargetOutputChannelLayout() const {

+ return ChannelLayoutToString(target_output_channel_layout());

+}

+

+bool AudioDecoderConfig::GetHumanReadableHasExtraData() const {

+ return !extra_data().empty();

+}

+

+bool AudioDecoderConfig::GetHumanReadableShouldDiscardDecoderDelay() const {

+ return should_discard_decoder_delay();

+}

+

+void AudioDecoderConfig::SetTotalStreamBitrate(int total_stream_bitrate) {

+ total_stream_bitrate_ = total_stream_bitrate;

+}

+

+void AudioDecoderConfig::SetAudioStreamBitrate(int audio_stream_bitrate) {

+ audio_stream_bitrate_ = audio_stream_bitrate;

+}

+

void AudioDecoderConfig::SetChannelsForDiscrete(int channels) {

DCHECK(channel_layout_ == CHANNEL_LAYOUT_DISCRETE ||

channels == ChannelLayoutToChannelCount(channel_layout_));

diff --git a/media/base/audio_decoder_config.h b/media/base/audio_decoder_config.h

index 1ec4ed073b995..c3f3a984a7735 100644

--- a/media/base/audio_decoder_config.h

+++ b/media/base/audio_decoder_config.h

@@ -62,6 +62,30 @@ class MEDIA_EXPORT AudioDecoderConfig {

// Returns a human-readable string describing |*this|.

std::string AsHumanReadableString() const;

+ // Returns individual fields of the config in human-readable form.

+ int GetHumanReadableTotalBitrate() const;

+ int GetHumanReadableAudioBitrate() const;

+ std::string GetHumanReadableCodecName() const;

+ std::string GetHumanReadableProfile() const;

+ int GetHumanReadableBytesPerChannel() const;

+ std::string GetHumanReadableChannelLayout() const;

+ int GetHumanReadableChannels() const;

+ int GetHumanReadableSamplesPerSecond() const;

+ std::string GetHumanReadableSampleFormat() const;

+ int GetHumanReadableBytesPerFrame() const;

+ std::string GetHumanReadableSeekPreroll() const;

+ int GetHumanReadableCodecDelay() const;

+ std::string GetHumanReadableEncryption() const;

+ std::string GetHumanReadableTargetOutputChannelLayout() const;

+ bool GetHumanReadableShouldDiscardDecoderDelay() const;

+ bool GetHumanReadableHasExtraData() const;

+

+ // Sets/Gets the video + audio bitrate and audio bitrate

+ int totalStreamBitrate() const { return total_stream_bitrate_; }

+ void SetTotalStreamBitrate(int total_stream_bitrate);

+ int audioStreamBitrate() const { return audio_stream_bitrate_; }

+ void SetAudioStreamBitrate(int audio_stream_bitrate);

+

// Sets the number of channels if |channel_layout_| is CHANNEL_LAYOUT_DISCRETE

void SetChannelsForDiscrete(int channels);

@@ -161,6 +185,10 @@ class MEDIA_EXPORT AudioDecoderConfig {

// be manually set in `SetChannelsForDiscrete()`;

int channels_ = 0;

+ // Total (audio + video) stream bitrate and audio-only bitrate.

+ int total_stream_bitrate_ = 0;

+ int audio_stream_bitrate_ = 0;

+

// Not using DISALLOW_COPY_AND_ASSIGN here intentionally to allow the compiler

// generated copy constructor and assignment operator. Since the extra data is

// typically small, the performance impact is minimal.

Extending ColorSpace

ColorSpace also has to expose its properties as the types we want, so it needs a small extension as well:
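The new getters reuse the `PRINT_ENUM_CASE` helper that color_space.cc already defines. The sketch below shows the idea on its own. It assumes the macro expands to a `case` label that streams the enumerator's name and then breaks. The `RangeID` enum here is a hypothetical local copy, not `gfx::ColorSpace::RangeID`.

```cpp
#include <sstream>
#include <string>

// Hypothetical local copy of the RangeID enumerators, for illustration only.
enum class RangeID { INVALID, LIMITED, FULL, DERIVED };

// Assumed to have the same shape as the helper in color_space.cc: each
// expansion emits a case label that streams the enumerator's own name.
#define PRINT_ENUM_CASE(TYPE, NAME) \
  case TYPE::NAME:                  \
    ss << #NAME;                    \
    break;

std::string RangeToString(RangeID range) {
  std::stringstream ss;
  switch (range) {
    PRINT_ENUM_CASE(RangeID, INVALID)
    PRINT_ENUM_CASE(RangeID, LIMITED)
    PRINT_ENUM_CASE(RangeID, FULL)
    PRINT_ENUM_CASE(RangeID, DERIVED)
  }
  return ss.str();
}

#undef PRINT_ENUM_CASE
```

Returning only the enumerator name gives front-end code a stable token, such as `FULL` or `BT709`, to aggregate on. The alternative would be parsing the combined output of `ToString()`.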

diff --git a/ui/gfx/color_space.cc b/ui/gfx/color_space.cc

index 02a4feec4481c..9d693abbf82fe 100644

--- a/ui/gfx/color_space.cc

+++ b/ui/gfx/color_space.cc

@@ -567,6 +567,151 @@ std::string ColorSpace::ToString() const {

return ss.str();

}

+std::string ColorSpace::GetPrimariesString() const {

+ std::stringstream ss;

+ ss << std::fixed << std::setprecision(4);

+ switch (primaries_) {

+ PRINT_ENUM_CASE(PrimaryID, INVALID)

+ PRINT_ENUM_CASE(PrimaryID, BT709)

+ PRINT_ENUM_CASE(PrimaryID, BT470M)

+ PRINT_ENUM_CASE(PrimaryID, BT470BG)

+ PRINT_ENUM_CASE(PrimaryID, SMPTE170M)

+ PRINT_ENUM_CASE(PrimaryID, SMPTE240M)

+ PRINT_ENUM_CASE(PrimaryID, FILM)

+ PRINT_ENUM_CASE(PrimaryID, BT2020)

+ PRINT_ENUM_CASE(PrimaryID, SMPTEST428_1)

+ PRINT_ENUM_CASE(PrimaryID, SMPTEST431_2)

+ PRINT_ENUM_CASE(PrimaryID, SMPTEST432_1)

+ PRINT_ENUM_CASE(PrimaryID, XYZ_D50)

+ PRINT_ENUM_CASE(PrimaryID, ADOBE_RGB)

+ PRINT_ENUM_CASE(PrimaryID, APPLE_GENERIC_RGB)

+ PRINT_ENUM_CASE(PrimaryID, WIDE_GAMUT_COLOR_SPIN)

+ case PrimaryID::CUSTOM:

+ // |custom_primary_matrix_| is in row-major order.

+ const float sum_R = custom_primary_matrix_[0] +

+ custom_primary_matrix_[3] + custom_primary_matrix_[6];

+ const float sum_G = custom_primary_matrix_[1] +

+ custom_primary_matrix_[4] + custom_primary_matrix_[7];

+ const float sum_B = custom_primary_matrix_[2] +

+ custom_primary_matrix_[5] + custom_primary_matrix_[8];

+ if (IsAlmostZero(sum_R) || IsAlmostZero(sum_G) || IsAlmostZero(sum_B))

+ break;

+

+ ss << "[[" << (custom_primary_matrix_[0] / sum_R)

+ << ", " << (custom_primary_matrix_[3] / sum_R) << "], "

+ << " [" << (custom_primary_matrix_[1] / sum_G) << ", "

+ << (custom_primary_matrix_[4] / sum_G) << "], "

+ << " [" << (custom_primary_matrix_[2] / sum_B) << ", "

+ << (custom_primary_matrix_[5] / sum_B) << "]]";

+ break;

+ }

+

+ return ss.str();

+}

+

+std::string ColorSpace::GetTransferString() const {

+ std::stringstream ss;

+ ss << std::fixed << std::setprecision(4);

+ switch (transfer_) {

+ PRINT_ENUM_CASE(TransferID, INVALID)

+ PRINT_ENUM_CASE(TransferID, BT709)

+ PRINT_ENUM_CASE(TransferID, BT709_APPLE)

+ PRINT_ENUM_CASE(TransferID, GAMMA18)

+ PRINT_ENUM_CASE(TransferID, GAMMA22)

+ PRINT_ENUM_CASE(TransferID, GAMMA24)

+ PRINT_ENUM_CASE(TransferID, GAMMA28)

+ PRINT_ENUM_CASE(TransferID, SMPTE170M)

+ PRINT_ENUM_CASE(TransferID, SMPTE240M)

+ PRINT_ENUM_CASE(TransferID, LINEAR)

+ PRINT_ENUM_CASE(TransferID, LOG)

+ PRINT_ENUM_CASE(TransferID, LOG_SQRT)

+ PRINT_ENUM_CASE(TransferID, IEC61966_2_4)

+ PRINT_ENUM_CASE(TransferID, BT1361_ECG)

+ PRINT_ENUM_CASE(TransferID, IEC61966_2_1)

+ PRINT_ENUM_CASE(TransferID, BT2020_10)

+ PRINT_ENUM_CASE(TransferID, BT2020_12)

+ PRINT_ENUM_CASE(TransferID, SMPTEST428_1)

+ PRINT_ENUM_CASE(TransferID, IEC61966_2_1_HDR)

+ PRINT_ENUM_CASE(TransferID, LINEAR_HDR)

+ case TransferID::ARIB_STD_B67:

+ ss << "HLG (SDR white point ";

+ if (transfer_params_[0] == 0.f)

+ ss << "default " << kDefaultSDRWhiteLevel;

+ else

+ ss << transfer_params_[0];

+ ss << " nits)";

+ break;

+ case TransferID::SMPTEST2084:

+ ss << "PQ (SDR white point ";

+ if (transfer_params_[0] == 0.f)

+ ss << "default " << kDefaultSDRWhiteLevel;

+ else

+ ss << transfer_params_[0];

+ ss << " nits)";

+ break;

+ case TransferID::CUSTOM: {

+ skcms_TransferFunction fn;

+ GetTransferFunction(&fn);

+ ss << fn.c << "*x + " << fn.f << " if x < " << fn.d << " else (" << fn.a

+ << "*x + " << fn.b << ")**" << fn.g << " + " << fn.e;

+ break;

+ }

+ case TransferID::CUSTOM_HDR: {

+ skcms_TransferFunction fn;

+ GetTransferFunction(&fn);

+ if (fn.g == 1.0f && fn.a > 0.0f && fn.b == 0.0f && fn.c == 0.0f &&

+ fn.d == 0.0f && fn.e == 0.0f && fn.f == 0.0f) {

+ ss << "LINEAR_HDR (slope " << fn.a << ", SDR white point "

+ << kDefaultScrgbLinearSdrWhiteLevel / fn.a << " nits)";

+ break;

+ }

+ ss << fn.c << "*x + " << fn.f << " if |x| < " << fn.d << " else sign(x)*("

+ << fn.a << "*|x| + " << fn.b << ")**" << fn.g << " + " << fn.e;

+ break;

+ }

+ case TransferID::PIECEWISE_HDR: {

+ skcms_TransferFunction fn;

+ GetTransferFunction(&fn);

+ ss << "sRGB to 1 at " << transfer_params_[0] << ", linear to "

+ << transfer_params_[1] << " at 1";

+ break;

+ }

+ }

+ return ss.str();

+}

+

+std::string ColorSpace::GetMatrixString() const {

+ std::stringstream ss;

+ ss << std::fixed << std::setprecision(4);

+ switch (matrix_) {

+ PRINT_ENUM_CASE(MatrixID, INVALID)

+ PRINT_ENUM_CASE(MatrixID, RGB)

+ PRINT_ENUM_CASE(MatrixID, BT709)

+ PRINT_ENUM_CASE(MatrixID, FCC)

+ PRINT_ENUM_CASE(MatrixID, BT470BG)

+ PRINT_ENUM_CASE(MatrixID, SMPTE170M)

+ PRINT_ENUM_CASE(MatrixID, SMPTE240M)

+ PRINT_ENUM_CASE(MatrixID, YCOCG)

+ PRINT_ENUM_CASE(MatrixID, BT2020_NCL)

+ PRINT_ENUM_CASE(MatrixID, BT2020_CL)

+ PRINT_ENUM_CASE(MatrixID, YDZDX)

+ PRINT_ENUM_CASE(MatrixID, GBR)

+ }

+ return ss.str();

+}

+

+std::string ColorSpace::GetRangeString() const {

+ std::stringstream ss;

+ ss << std::fixed << std::setprecision(4);

+ switch (range_) {

+ PRINT_ENUM_CASE(RangeID, INVALID)

+ PRINT_ENUM_CASE(RangeID, LIMITED)

+ PRINT_ENUM_CASE(RangeID, FULL)

+ PRINT_ENUM_CASE(RangeID, DERIVED)

+ }

+ return ss.str();

+}

+

#undef PRINT_ENUM_CASE

ColorSpace ColorSpace::GetAsFullRangeRGB() const {

diff --git a/ui/gfx/color_space.h b/ui/gfx/color_space.h

index 4c3f068c86877..aa4482b3c258e 100644

--- a/ui/gfx/color_space.h

+++ b/ui/gfx/color_space.h

@@ -262,6 +262,10 @@ class COLOR_SPACE_EXPORT ColorSpace {

bool operator<(const ColorSpace& other) const;

size_t GetHash() const;

std::string ToString() const;

+ std::string GetPrimariesString() const;

+ std::string GetTransferString() const;

+ std::string GetMatrixString() const;

+ std::string GetRangeString() const;

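bool IsWide() const;

Defining the MediaDecoderConfig IDL

There is not much to this part; it is mostly plumbing that copies strings around. It implements the MediaDecoderConfig data type we need, which is simply the union of VideoDecoderConfig and AudioDecoderConfig.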
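Without the long argument lists, MediaDecoderConfig is one object that holds a video half and an audio half. The sketch below models this in plain C++. It uses hypothetical `VideoPart`/`AudioPart` structs with two fields each, and leaves out Blink's garbage-collected `ScriptWrappable` machinery. As in the patch, both setters write the shared total bitrate, so the setter that runs last determines `totalBitrate()`.

```cpp
#include <string>

// Hypothetical plain-C++ analogue of blink::MediaDecoderConfig: one object
// holding both the video and the audio half of the stream description.
struct VideoPart {
  int bitrate = 0;
  std::string codec;
};
struct AudioPart {
  int bitrate = 0;
  std::string codec;
};

class MediaDecoderConfigSketch {
 public:
  // Like SetVideoConfig()/SetAudioConfig() in the patch, both setters also
  // record the container-level total bitrate; the last call wins.
  void SetVideoConfig(int total_bitrate, const VideoPart& video) {
    total_bitrate_ = total_bitrate;
    video_ = video;
  }
  void SetAudioConfig(int total_bitrate, const AudioPart& audio) {
    total_bitrate_ = total_bitrate;
    audio_ = audio;
  }

  int totalBitrate() const { return total_bitrate_; }
  const VideoPart& video() const { return video_; }
  const AudioPart& audio() const { return audio_; }

 private:
  int total_bitrate_ = 0;
  VideoPart video_;
  AudioPart audio_;
};
```

Grouping fields into small structs like this is one way to shorten the 26-parameter `SetVideoConfig()` signature. The patch passes every field individually instead.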

+++ b/third_party/blink/renderer/core/html/media/media_decoder_config.cc

@@ -0,0 +1,173 @@

+

+#include "third_party/blink/renderer/core/html/media/media_decoder_config.h"

+

+// #include "third_party/blink/renderer/core/core_export.h"

+#include "third_party/blink/renderer/core/dom/document.h"

+#include "third_party/blink/renderer/core/frame/local_dom_window.h"

+#include "third_party/blink/renderer/core/timing/dom_window_performance.h"

+#include "third_party/blink/renderer/core/timing/window_performance.h"

+

+namespace blink {

+

+MediaDecoderConfig::MediaDecoderConfig() :

+ total_bitrate_(0),

+ video_bitrate_(0),

+ video_codec_(""),

+ video_profile_(""),

+ video_level_(""),

+ video_color_space_primaries_(""),

+ video_color_space_transfer_(""),

+ video_color_space_matrix_(""),

+ video_color_space_range_(""),

+ video_coded_size_width_(0),

+ video_coded_size_height_(0),

+ video_natural_size_width_(0),

+ video_natural_size_height_(0),

+ video_visible_rect_x_(0),

+ video_visible_rect_y_(0),

+ video_visible_rect_width_(0),

+ video_visible_rect_height_(0),

+ video_encryption_(""),

+ video_hdr_metadata_luminance_range_(""),

+ video_hdr_metadata_primaries_(""),

+ video_hdr_metadata_max_content_light_level_(0),

+ video_hdr_metadata_max_frame_average_light_level_(0),

+ video_transformation_rotation_(""),

+ video_transformation_flipped_(false),

+ video_has_alpha_(false),

+ video_has_extra_data_(false),

+ audio_bitrate_(0),

+ audio_codec_(""),

+ audio_profile_(""),

+ audio_bytes_per_channel_(0),

+ audio_channel_layout_(""),

+ audio_channels_(0),

+ audio_samples_per_second_(0),

+ audio_sample_format_(""),

+ audio_bytes_per_frame_(0),

+ audio_seek_preroll_(""),

+ audio_codec_delay_(0),

+ audio_encryption_(""),

+ audio_target_output_channel_layout_(""),

+ audio_should_discard_decoder_delay_(false),

+ audio_has_extra_data_(false) {}

+

+void MediaDecoderConfig::SetVideoConfig(int total_bitrate,

+ int video_bitrate,

+ AtomicString video_codec,

+ AtomicString video_profile,

+ AtomicString video_level,

+ AtomicString video_color_space_primaries,

+ AtomicString video_color_space_transfer,

+ AtomicString video_color_space_matrix,

+ AtomicString video_color_space_range,

+ float video_coded_size_width,

+ float video_coded_size_height,

+ float video_natural_size_width,

+ float video_natural_size_height,

+ float video_visible_rect_x,

+ float video_visible_rect_y,

+ float video_visible_rect_width,

+ float video_visible_rect_height,

+ AtomicString video_encryption,

+ AtomicString video_hdr_metadata_luminance_range,

+ AtomicString video_hdr_metadata_primaries,

+ unsigned video_hdr_metadata_max_content_light_level,

+ unsigned video_hdr_metadata_max_frame_average_light_level,

+ AtomicString video_transformation_rotation,

+ bool video_transformation_flipped,

+ bool video_has_alpha,

+ bool video_has_extra_data)

+ {

+ total_bitrate_ = total_bitrate;

+ video_bitrate_ = video_bitrate;

+ video_codec_ = video_codec;

+ video_profile_ = video_profile;

+ video_level_ = video_level;

+ video_color_space_primaries_ = video_color_space_primaries;

+ video_color_space_transfer_ = video_color_space_transfer;

+ video_color_space_matrix_ = video_color_space_matrix;

+ video_color_space_range_ = video_color_space_range;

+ video_coded_size_width_ = video_coded_size_width;

+ video_coded_size_height_ = video_coded_size_height;

+ video_natural_size_width_ = video_natural_size_width;

+ video_natural_size_height_ = video_natural_size_height;

+ video_visible_rect_x_ = video_visible_rect_x;

+ video_visible_rect_y_ = video_visible_rect_y;

+ video_visible_rect_width_ = video_visible_rect_width;

+ video_visible_rect_height_ = video_visible_rect_height;

+ video_encryption_ = video_encryption;

+ video_hdr_metadata_luminance_range_ = video_hdr_metadata_luminance_range;

+ video_hdr_metadata_primaries_ = video_hdr_metadata_primaries;

+ video_hdr_metadata_max_content_light_level_ = video_hdr_metadata_max_content_light_level;

+ video_hdr_metadata_max_frame_average_light_level_ = video_hdr_metadata_max_frame_average_light_level;

+ video_transformation_rotation_ = video_transformation_rotation;

+ video_transformation_flipped_ = video_transformation_flipped;

+ video_has_alpha_ = video_has_alpha;

+ video_has_extra_data_ = video_has_extra_data;

+ }

+

+void MediaDecoderConfig::SetAudioConfig(int total_bitrate,

+ int audio_bitrate,

+ AtomicString audio_codec,

+ AtomicString audio_profile,

+ int audio_bytes_per_channel,

+ AtomicString audio_channel_layout,

+ int audio_channels,

+ int audio_samples_per_second,

+ AtomicString audio_sample_format,

+ int audio_bytes_per_frame,

+ AtomicString audio_seek_preroll,

+ int audio_codec_delay,

+ AtomicString audio_encryption,

+ AtomicString audio_target_output_channel_layout,

+ bool audio_should_discard_decoder_delay,

+ bool audio_has_extra_data)

+ {

+ total_bitrate_ = total_bitrate;

+ audio_bitrate_ = audio_bitrate;

+ audio_codec_ = audio_codec;

+ audio_profile_ = audio_profile;

+ audio_bytes_per_channel_ = audio_bytes_per_channel;

+ audio_channel_layout_ = audio_channel_layout;

+ audio_channels_ = audio_channels;

+ audio_samples_per_second_ = audio_samples_per_second;

+ audio_sample_format_ = audio_sample_format;

+ audio_bytes_per_frame_ = audio_bytes_per_frame;

+ audio_seek_preroll_ = audio_seek_preroll;

+ audio_codec_delay_ = audio_codec_delay;

+ audio_encryption_ = audio_encryption;

+ audio_target_output_channel_layout_ = audio_target_output_channel_layout;

+ audio_should_discard_decoder_delay_ = audio_should_discard_decoder_delay;

+ audio_has_extra_data_ = audio_has_extra_data;

+ }

+} // namespace blink

diff --git a/third_party/blink/renderer/core/html/media/media_decoder_config.h b/third_party/blink/renderer/core/html/media/media_decoder_config.h

new file mode 100644

index 0000000000000..942d209700909

--- /dev/null

+++ b/third_party/blink/renderer/core/html/media/media_decoder_config.h

@@ -0,0 +1,187 @@

+

+#ifndef THIRD_PARTY_BLINK_RENDERER_MODULES_MEDIA_DECODER_CONFIG_H_

+#define THIRD_PARTY_BLINK_RENDERER_MODULES_MEDIA_DECODER_CONFIG_H_

+

+#include "third_party/blink/renderer/platform/bindings/script_wrappable.h"

+#include "third_party/blink/renderer/platform/heap/handle.h"

+#include "third_party/blink/renderer/platform/wtf/text/wtf_string.h"

+

+namespace blink {

+

+class Document;

+

+class MediaDecoderConfig : public ScriptWrappable {

+ DEFINE_WRAPPERTYPEINFO();

+

+ public:

+ MediaDecoderConfig();

+ void SetVideoConfig(int total_bitrate,

+ int video_bitrate,

+ AtomicString video_codec,

+ AtomicString video_profile,

+ AtomicString video_level,

+ AtomicString video_color_space_primaries,

+ AtomicString video_color_space_transfer,

+ AtomicString video_color_space_matrix,

+ AtomicString video_color_space_range,

+ float video_coded_size_width,

+ float video_coded_size_height,

+ float video_natural_size_width,

+ float video_natural_size_height,

+ float video_visible_rect_x,

+ float video_visible_rect_y,

+ float video_visible_rect_width,

+ float video_visible_rect_height,

+ AtomicString video_encryption,

+ AtomicString video_hdr_metadata_luminance_range,

+ AtomicString video_hdr_metadata_primaries,

+ unsigned video_hdr_metadata_max_content_light_level,

+ unsigned video_hdr_metadata_max_frame_average_light_level,

+ AtomicString video_transformation_rotation,

+ bool video_transformation_flipped,

+ bool video_has_alpha,

+ bool video_has_extra_data);

+

+ void SetAudioConfig(int total_bitrate,

+ int audio_bitrate,

+ AtomicString audio_codec,

+ AtomicString audio_profile,

+ int audio_bytes_per_channel,

+ AtomicString audio_channel_layout,

+ int audio_channels,

+ int audio_samples_per_second,

+ AtomicString audio_sample_format,

+ int audio_bytes_per_frame,

+ AtomicString audio_seek_preroll,

+ int audio_codec_delay,

+ AtomicString audio_encryption,

+ AtomicString audio_target_output_channel_layout,

+ bool audio_should_discard_decoder_delay,

+ bool audio_has_extra_data);

+

+ int totalBitrate() const { return total_bitrate_; }

+ // video config

+ int videoBitrate() const { return video_bitrate_; }

+ AtomicString videoCodec() const { return video_codec_; }

+ AtomicString videoProfile() const { return video_profile_; }

+ AtomicString videoLevel() const { return video_level_; }

+ AtomicString videoColorSpacePrimaries() const { return video_color_space_primaries_; }

+ AtomicString videoColorSpaceTransfer() const { return video_color_space_transfer_; }

+ AtomicString videoColorSpaceMatrix() const { return video_color_space_matrix_; }

+ AtomicString videoColorSpaceRange() const { return video_color_space_range_; }

+ float videoCodedSizeWidth() const { return video_coded_size_width_; }

+ float videoCodedSizeHeight() const { return video_coded_size_height_; }

+ float videoNaturalSizeWidth() const { return video_natural_size_width_; }

+ float videoNaturalSizeHeight() const { return video_natural_size_height_; }

+ float videoVisibleRectX() const { return video_visible_rect_x_; }

+ float videoVisibleRectY() const { return video_visible_rect_y_; }

+ float videoVisibleRectWidth() const { return video_visible_rect_width_; }

+ float videoVisibleRectHeight() const { return video_visible_rect_height_; }

+ AtomicString videoEncryption() const { return video_encryption_; }

+ AtomicString videoHdrMetadataLuminanceRange() const { return video_hdr_metadata_luminance_range_; }

+ AtomicString videoHdrMetadataPrimaries() const { return video_hdr_metadata_primaries_; }

+ unsigned videoHdrMetadataMaxContentLightLevel() const { return video_hdr_metadata_max_content_light_level_; }

+ unsigned videoHdrMetadataMaxFrameAverageLightLevel() const { return video_hdr_metadata_max_frame_average_light_level_; }

+ AtomicString videoTransformationRotation() const { return video_transformation_rotation_; }

+ bool videoTransformationFlipped() const { return video_transformation_flipped_; }

+ bool videoHasAlpha() const { return video_has_alpha_; }

+ bool videoHasExtraData() const { return video_has_extra_data_; }

+

+

+ // audio config

+ int audioBitrate() const { return audio_bitrate_; }

+ AtomicString audioCodec() const { return audio_codec_; }

+ AtomicString audioProfile() const { return audio_profile_; }

+ int audioBytesPerChannel() const { return audio_bytes_per_channel_; }

+ AtomicString audioChannelLayout() const { return audio_channel_layout_; }

+ int audioChannels() const { return audio_channels_; }

+ int audioSamplesPerSecond() const { return audio_samples_per_second_; }

+ AtomicString audioSampleFormat() const { return audio_sample_format_; }

+ int audioBytesPerFrame() const { return audio_bytes_per_frame_; }

+ AtomicString audioSeekPreroll() const { return audio_seek_preroll_; }

+ int audioCodecDelay() const { return audio_codec_delay_; }

+ AtomicString audioEncryption() const { return audio_encryption_; }

+ AtomicString audioTargetOutputChannelLayout() const { return audio_target_output_channel_layout_; }

+ bool audioShouldDiscardDecoderDelay() const { return audio_should_discard_decoder_delay_; }

+ bool audioHasExtraData() const { return audio_has_extra_data_; }

+

+ private:

+ int total_bitrate_;

+

+ // video

+ int video_bitrate_;

+ AtomicString video_codec_;

+ AtomicString video_profile_;

+ AtomicString video_level_;

+ AtomicString video_color_space_primaries_;

+ AtomicString video_color_space_transfer_;

+ AtomicString video_color_space_matrix_;

+ AtomicString video_color_space_range_;

+ float video_coded_size_width_;

+ float video_coded_size_height_;

+ float video_natural_size_width_;

+ float video_natural_size_height_;

+ float video_visible_rect_x_;

+ float video_visible_rect_y_;

+ float video_visible_rect_width_;

+ float video_visible_rect_height_;

+ AtomicString video_encryption_;

+ AtomicString video_hdr_metadata_luminance_range_;

+ AtomicString video_hdr_metadata_primaries_;

+ unsigned video_hdr_metadata_max_content_light_level_;

+ unsigned video_hdr_metadata_max_frame_average_light_level_;

+ AtomicString video_transformation_rotation_;

+ bool video_transformation_flipped_;

+ bool video_has_alpha_;

+ bool video_has_extra_data_;

+

+ // audio

+ int audio_bitrate_;

+ AtomicString audio_codec_;

+ AtomicString audio_profile_;

+ int audio_bytes_per_channel_;

+ AtomicString audio_channel_layout_;

+ int audio_channels_;

+ int audio_samples_per_second_;

+ AtomicString audio_sample_format_;

+ int audio_bytes_per_frame_;

+ AtomicString audio_seek_preroll_;

+ int audio_codec_delay_;

+ AtomicString audio_encryption_;

+ AtomicString audio_target_output_channel_layout_;

+ bool audio_should_discard_decoder_delay_;

+ bool audio_has_extra_data_;

+};

+

+} // namespace blink

+

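+#endif // THIRD_PARTY_BLINK_RENDERER_MODULES_MEDIA_DECODER_CONFIG_H_

Every data structure that Blink exposes needs an IDL definition. The IDL describes the data type that front-end code actually receives when it accesses the object. I will cover IDL's attributes and what they mean in a separate article later.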
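One detail to check when writing the IDL below: the bitrate and count attributes are declared as `unsigned long` (WebIDL's 32-bit unsigned integer, range 0 to 2^32 - 1), but the matching C++ getters return `int`. The values default to 0 and should never be negative. If one ever were, a plain `int` to `uint32_t` conversion would wrap it, depending on how the generated binding converts the value. `ToIdlUnsignedLong` is a hypothetical helper, not part of Blink. It sketches how a getter could clamp defensively.

```cpp
#include <cstdint>

// Hypothetical helper (not part of Blink): clamp a signed C++ value into the
// range of WebIDL `unsigned long` (0 .. 2^32 - 1) before exposing it, instead
// of relying on an implicit int -> uint32_t conversion that would turn -1
// into 4294967295.
// The other types in this IDL map directly: DOMString to String/AtomicString,
// boolean to bool, float to float.
std::uint32_t ToIdlUnsignedLong(int value) {
  return value < 0 ? 0u : static_cast<std::uint32_t>(value);
}
```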

+++ b/third_party/blink/renderer/core/html/media/media_decoder_config.idl

@@ -0,0 +1,46 @@

+[Exposed=Window]

+interface MediaDecoderConfig {

+ readonly attribute unsigned long totalBitrate;

+

+ readonly attribute unsigned long videoBitrate;

+ readonly attribute DOMString videoCodec;

+ readonly attribute DOMString videoProfile;

+ readonly attribute DOMString videoLevel;

+ readonly attribute DOMString videoColorSpacePrimaries;

+ readonly attribute DOMString videoColorSpaceTransfer;

+ readonly attribute DOMString videoColorSpaceMatrix;

+ readonly attribute DOMString videoColorSpaceRange;

+ readonly attribute float videoCodedSizeWidth;

+ readonly attribute float videoCodedSizeHeight;

+ readonly attribute float videoNaturalSizeWidth;

+ readonly attribute float videoNaturalSizeHeight;

+ readonly attribute float videoVisibleRectX;

+ readonly attribute float videoVisibleRectY;

+ readonly attribute float videoVisibleRectWidth;

+ readonly attribute float videoVisibleRectHeight;

+ readonly attribute DOMString videoEncryption;

+ readonly attribute DOMString videoHdrMetadataLuminanceRange;

+ readonly attribute DOMString videoHdrMetadataPrimaries;

+ readonly attribute unsigned long videoHdrMetadataMaxContentLightLevel;

+ readonly attribute unsigned long videoHdrMetadataMaxFrameAverageLightLevel;

+ readonly attribute DOMString videoTransformationRotation;

+ readonly attribute boolean videoTransformationFlipped;

+ readonly attribute boolean videoHasAlpha;

+ readonly attribute boolean videoHasExtraData;

+

+ readonly attribute unsigned long audioBitrate;

+ readonly attribute DOMString audioCodec;

+ readonly attribute DOMString audioProfile;

+ readonly attribute unsigned long audioBytesPerChannel;

+ readonly attribute DOMString audioChannelLayout;

+ readonly attribute unsigned long audioChannels;

+ readonly attribute unsigned long audioSamplesPerSecond;

+ readonly attribute DOMString audioSampleFormat;

+ readonly attribute unsigned long audioBytesPerFrame;

+ readonly attribute DOMString audioSeekPreroll;

+ readonly attribute unsigned long audioCodecDelay;

+ readonly attribute DOMString audioEncryption;

+ readonly attribute DOMString audioTargetOutputChannelLayout;

+ readonly attribute boolean audioShouldDiscardDecoderDelay;

+ readonly attribute boolean audioHasExtraData;

+};

Next, register the new files in the build:

diff --git a/third_party/blink/renderer/bindings/generated_in_core.gni b/third_party/blink/renderer/bindings/generated_in_core.gni

index dbd963f7118e5..d690cec5e216d 100644

--- a/third_party/blink/renderer/bindings/generated_in_core.gni

+++ b/third_party/blink/renderer/bindings/generated_in_core.gni

@@ -997,6 +997,8 @@ generated_interface_sources_in_core = [

"$root_gen_dir/third_party/blink/renderer/bindings/core/v8/v8_location.h",

"$root_gen_dir/third_party/blink/renderer/bindings/core/v8/v8_mathml_element.cc",

"$root_gen_dir/third_party/blink/renderer/bindings/core/v8/v8_mathml_element.h",

+ "$root_gen_dir/third_party/blink/renderer/bindings/core/v8/v8_media_decoder_config.cc",

+ "$root_gen_dir/third_party/blink/renderer/bindings/core/v8/v8_media_decoder_config.h",

"$root_gen_dir/third_party/blink/renderer/bindings/core/v8/v8_media_error.cc",

"$root_gen_dir/third_party/blink/renderer/bindings/core/v8/v8_media_error.h",

"$root_gen_dir/third_party/blink/renderer/bindings/core/v8/v8_media_list.cc",

diff --git a/third_party/blink/renderer/bindings/idl_in_core.gni b/third_party/blink/renderer/bindings/idl_in_core.gni

index bccaa0392bd7c..179f601947be6 100644

--- a/third_party/blink/renderer/bindings/idl_in_core.gni

+++ b/third_party/blink/renderer/bindings/idl_in_core.gni

@@ -435,6 +435,7 @@ static_idl_files_in_core = get_path_info(

"//third_party/blink/renderer/core/html/media/html_audio_element.idl",

"//third_party/blink/renderer/core/html/media/html_media_element.idl",

"//third_party/blink/renderer/core/html/media/html_video_element.idl",

+ "//third_party/blink/renderer/core/html/media/media_decoder_config.idl",

"//third_party/blink/renderer/core/html/media/media_error.idl",

"//third_party/blink/renderer/core/html/portal/html_portal_element.idl",

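"//third_party/blink/renderer/core/html/portal/portal_activate_event.idl",

Defining the mediaconfigchange event

To let pages observe changes in the media information when the media source changes, we add a new mediaconfigchange event. We also add an onmediaconfigchange attribute for binding a handler.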
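The two registration styles in the snippet below differ in one way that matters for analytics code. `addEventListener` accumulates callbacks, while assigning `onmediaconfigchange` replaces the previous handler. The replacement handler keeps the position where a handler was first registered. The hypothetical `MediaEventTargetSketch` models only that rule in plain C++. It is not Blink's `EventTarget` and leaves out capture, duplicate detection and event objects.

```cpp
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified event target (not Blink's EventTarget).
class MediaEventTargetSketch {
 public:
  using Listener = std::function<void(const std::string& type)>;

  MediaEventTargetSketch() = default;
  // The handler slot captures |this|, so copying is disabled.
  MediaEventTargetSketch(const MediaEventTargetSketch&) = delete;
  MediaEventTargetSketch& operator=(const MediaEventTargetSketch&) = delete;

  // addEventListener('mediaconfigchange', cb): every call appends a callback.
  void AddEventListener(const std::string& type, Listener cb) {
    if (type == "mediaconfigchange") listeners_.push_back(std::move(cb));
  }

  // media.onmediaconfigchange = cb: a single handler slot. The first
  // assignment reserves a position in the listener list; later assignments
  // only swap the callback. (Assigning null is not modeled.)
  void SetOnMediaConfigChange(Listener cb) {
    if (!handler_slot_reserved_) {
      listeners_.push_back([this](const std::string& type) {
        if (on_handler_) on_handler_(type);
      });
      handler_slot_reserved_ = true;
    }
    on_handler_ = std::move(cb);
  }

  // Invokes all listeners in registration order.
  void DispatchMediaConfigChange() {
    for (const auto& cb : listeners_) cb("mediaconfigchange");
  }

 private:
  std::vector<Listener> listeners_;
  Listener on_handler_;
  bool handler_slot_reserved_ = false;
};
```

If a page uses the `onmediaconfigchange` assignment, a second script that assigns it again will silently replace the first handler. `addEventListener` avoids that.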

// Create a video element (an audio element works the same way)

const media = document.createElement('video');

const cb = (e: Event) => console.log('Media config changed', media.getMediaConfig());

// Add the listener

media.addEventListener('mediaconfigchange', cb);

// Or register it through the handler attribute

media.onmediaconfigchange = cb;

diff --git a/third_party/blink/renderer/core/dom/global_event_handlers.h b/third_party/blink/renderer/core/dom/global_event_handlers.h

index 63d3e344b46a1..7c23f4fab9265 100644

--- a/third_party/blink/renderer/core/dom/global_event_handlers.h

+++ b/third_party/blink/renderer/core/dom/global_event_handlers.h

@@ -80,6 +80,7 @@ class GlobalEventHandlers {

DEFINE_STATIC_ATTRIBUTE_EVENT_LISTENER(loadstart, kLoadstart)

DEFINE_STATIC_ATTRIBUTE_EVENT_LISTENER(lostpointercapture,

kLostpointercapture)

+ DEFINE_STATIC_ATTRIBUTE_EVENT_LISTENER(mediaconfigchange, kMediaconfigchange)

DEFINE_STATIC_ATTRIBUTE_EVENT_LISTENER(mousedown, kMousedown)

DEFINE_STATIC_ATTRIBUTE_EVENT_LISTENER(mouseenter, kMouseenter)

DEFINE_STATIC_ATTRIBUTE_EVENT_LISTENER(mouseleave, kMouseleave)

diff --git a/third_party/blink/renderer/core/dom/global_event_handlers.idl b/third_party/blink/renderer/core/dom/global_event_handlers.idl

index c7657dde82327..3858e98d69a5c 100644

--- a/third_party/blink/renderer/core/dom/global_event_handlers.idl

+++ b/third_party/blink/renderer/core/dom/global_event_handlers.idl

@@ -66,6 +66,7 @@

attribute EventHandler onloadeddata;

attribute EventHandler onloadedmetadata;

attribute EventHandler onloadstart;

+ attribute EventHandler onmediaconfigchange;

attribute EventHandler onmousedown;

[LegacyLenientThis] attribute EventHandler onmouseenter;

[LegacyLenientThis] attribute EventHandler onmouseleave;

diff --git a/third_party/blink/renderer/core/events/event_type_names.json5 b/third_party/blink/renderer/core/events/event_type_names.json5

index fcb366c160ed4..210b2d051fef1 100644

--- a/third_party/blink/renderer/core/events/event_type_names.json5

+++ b/third_party/blink/renderer/core/events/event_type_names.json5

@@ -182,6 +182,7 @@

"lostpointercapture",

"managedconfigurationchange",

"mark",

+ "mediaconfigchange",

"message",

"messageerror",

"midimessage",

diff --git a/third_party/blink/renderer/core/html/html_element.cc b/third_party/blink/renderer/core/html/html_element.cc

index 46185c325204f..316458201959b 100644

--- a/third_party/blink/renderer/core/html/html_element.cc

+++ b/third_party/blink/renderer/core/html/html_element.cc

@@ -583,6 +583,8 @@ AttributeTriggers* HTMLElement::TriggersForAttributeName(

event_type_names::kTouchstart, nullptr},

{html_names::kOntransitionendAttr, kNoWebFeature,

event_type_names::kWebkitTransitionEnd, nullptr},

+ {html_names::kOnmediaconfigchangeAttr, kNoWebFeature,

+ event_type_names::kMediaconfigchange, nullptr},

{html_names::kOnvolumechangeAttr, kNoWebFeature,

event_type_names::kVolumechange, nullptr},

{html_names::kOnwaitingAttr, kNoWebFeature, event_type_names::kWaiting,
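The edits above only work if the generated identifiers line up across files: the event name "mediaconfigchange" in event_type_names.json5 surfaces in C++ as event_type_names::kMediaconfigchange, and the attribute name "onmediaconfigchange" (added to html_attribute_names.json5 further down) surfaces as html_names::kOnmediaconfigchangeAttr. Judging only from the identifiers visible in these diffs (kTouchstart, kVolumechange, kOnwaitingAttr, ...), the generators appear to prefix "k", upper-case the first letter and, for attributes, append "Attr". The sketch below encodes that inferred rule as a quick consistency checklist; blinkEventConstant, blinkAttrConstant and handlerChecklist are hypothetical helpers, not part of Chromium's build scripts.

```typescript
// Hypothetical helpers. The naming rule ("k" + first letter upper-cased,
// plus "Attr" for attributes) is inferred from the identifiers in the diffs
// above, not taken from Blink's code generators.

function capitalizeFirst(name: string): string {
  return name.length === 0 ? name : name.charAt(0).toUpperCase() + name.slice(1);
}

/** "mediaconfigchange" -> "event_type_names::kMediaconfigchange" */
function blinkEventConstant(eventName: string): string {
  return `event_type_names::k${capitalizeFirst(eventName)}`;
}

/** "onmediaconfigchange" -> "html_names::kOnmediaconfigchangeAttr" */
function blinkAttrConstant(attrName: string): string {
  return `html_names::k${capitalizeFirst(attrName)}Attr`;
}

/** Lists the entries that must agree for one new on<event> handler attribute. */
function handlerChecklist(eventName: string): string[] {
  const attr = `on${eventName}`;
  return [
    `event_type_names.json5: "${eventName}" -> ${blinkEventConstant(eventName)}`,
    `html_attribute_names.json5: "${attr}" -> ${blinkAttrConstant(attr)}`,
    `global_event_handlers.idl: attribute EventHandler ${attr};`,
    `global_event_handlers.h: DEFINE_STATIC_ATTRIBUTE_EVENT_LISTENER(${eventName}, k${capitalizeFirst(eventName)})`,
  ];
}

// Print the checklist for the event added in this patch.
for (const line of handlerChecklist("mediaconfigchange")) {
  console.log(line);
}
```

If a build error mentions an undeclared kOn...Attr or k... constant, comparing it against this list is a quick way to spot which json5 entry is missing or misspelled.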


Define the videoconfig and audioconfig attributes

Following the same pattern as other Blink attributes, add the attribute names we need (videoconfig, audioconfig and onmediaconfigchange) to html_attribute_names.json5.

diff --git a/third_party/blink/renderer/core/html/html_attribute_names.json5 b/third_party/blink/renderer/core/html/html_attribute_names.json5

index 04210d127dab1..6c4d0924101a9 100644

--- a/third_party/blink/renderer/core/html/html_attribute_names.json5

+++ b/third_party/blink/renderer/core/html/html_attribute_names.json5

@@ -29,6 +29,7 @@

"attributionreportto",

"attributionsourceeventid",

"attributionsourcepriority",

+ "audioconfig",

"autocapitalize",

"autocomplete",

"autocorrect",

@@ -205,6 +206,7 @@

"onloadedmetadata",

"onloadstart",

"onlostpointercapture",

+ "onmediaconfigchange",

"onmessage",

"onmessageerror",

"onmousedown",

@@ -338,6 +340,7 @@

"vlink",

"vspace",

"virtualkeyboardpolicy",

+ "videoconfig",

"webkitdirectory",

"width",

"wrap",

Code and usage example

// Create a video element (an audio element works too)

const media = document.createElement('video');

// Log the decoder config every time it changes

const cb = (e: Event) => console.log('Media Config Changed:', media.getMediaConfig());

// Add a listener

media.addEventListener('mediaconfigchange', cb);

// Or attach the handler this way

media.onmediaconfigchange = cb;

// Set src

// [CONSOLE]

// Media Config Changed:MediaDecoderConfig {totalBitrate: 11163352, videoBitrate: 11035352, videoCodec: 'mpeg4', videoProfile: 'unknown', videoLevel: 'not available', …}

media.src = 'https://a.b.c/xxx.avi';

// Change src

// [CONSOLE]

// Media Config Changed:MediaDecoderConfig {totalBitrate: 13115756, videoBitrate: 12943517, videoCodec: 'hevc', videoProfile: 'hevc main', videoLevel: 'not available', …}

media.src = 'https://a.b.c/yyy.mp4';

Example: changing the source

Example: listening for changes
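With the API in place, the original goal of counting how many videos are HEVC becomes a matter of subscribing and aggregating. The sketch below assumes the custom getMediaConfig() method and mediaconfigchange event added by this patch; MediaDecoderConfigLike lists only the fields visible in the console output above (the real object has more), and watchMediaConfig and countByCodec are hypothetical helper names. Since stock Chromium has neither the method nor the event, the helper feature-detects and returns null there.

```typescript
// Assumed shape of getMediaConfig()'s result: only the fields seen in the
// console output above; the real MediaDecoderConfig has more.
interface MediaDecoderConfigLike {
  totalBitrate?: number;
  videoBitrate?: number;
  videoCodec?: string;
  videoProfile?: string;
  videoLevel?: string;
}

// Minimal structural view of a media element. On a patched build this is a
// <video>/<audio> element; on stock Chromium getMediaConfig is absent.
interface MediaConfigSource {
  addEventListener(type: string, listener: () => void): void;
  removeEventListener(type: string, listener: () => void): void;
  getMediaConfig?: () => MediaDecoderConfigLike;
}

/**
 * Hypothetical helper: reports each config change and returns an unsubscribe
 * function, or null when the private API is not available.
 */
function watchMediaConfig(
  media: MediaConfigSource,
  report: (config: MediaDecoderConfigLike) => void,
): (() => void) | null {
  const getConfig = media.getMediaConfig;
  if (typeof getConfig !== "function") return null; // stock browser: skip
  // Call with the element as `this`, as a prototype method on a real element expects.
  const listener = () => report(getConfig.call(media));
  media.addEventListener("mediaconfigchange", listener);
  return () => media.removeEventListener("mediaconfigchange", listener);
}

/** Counts collected configs per video codec, e.g. to estimate the HEVC share. */
function countByCodec(configs: MediaDecoderConfigLike[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const config of configs) {
    const codec = config.videoCodec ?? "unknown";
    counts.set(codec, (counts.get(codec) ?? 0) + 1);
  }
  return counts;
}
```

On a patched build you would pass the video element itself, push each reported config into an array, and periodically send countByCodec(...) to your statistics backend; the null return lets the same script run unchanged in an ordinary browser.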

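Summary

As shown above, implementing a private API like MediaConfig in Chromium requires:

- A scenario where it can actually ship, such as an Electron or CEF client.
- Knowing how to build Chromium, debug it, and iterate quickly.
- A clear idea of what you want and where it should be implemented.
- Many rounds of compiling and trying again.

If all of these apply, give it a try yourself.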